The results for the wikipediaMM task have been computed with the trec_eval tool (version 8.1). Please use this tool in combination with the sources provided below.
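Runs like the ones provided below can also be scored with trec_eval from a script. The following is a minimal sketch. It assumes a `trec_eval` binary is on the `PATH` and uses placeholder file names for the relevance judgments (qrels) and the run. It runs the tool and collects the topic-averaged lines, the ones whose second column is `all`, into a dictionary keyed by measure name (e.g. `map`, `bpref`).

```python
import subprocess


def run_trec_eval(qrels_path: str, run_path: str, per_topic: bool = False) -> str:
    """Run trec_eval on one run file and return its raw text output.

    Assumes a trec_eval binary on PATH; both paths are placeholders.
    """
    cmd = ["trec_eval"]
    if per_topic:
        cmd.append("-q")  # also report a score line for every topic
    cmd += [qrels_path, run_path]  # relevance judgments first, then the run
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


def parse_trec_eval(output: str) -> dict:
    """Collect the 'all' (averaged over topics) lines into {measure: value}."""
    scores = {}
    for line in output.splitlines():
        parts = line.split()
        if len(parts) == 3 and parts[1] == "all":
            measure, _, value = parts
            try:
                scores[measure] = float(value)
            except ValueError:
                scores[measure] = value  # e.g. the runid line holds a name
    return scores
```

For example, `parse_trec_eval(run_trec_eval("qrels.txt", "ceaTxt.run"))["map"]` would give the run's MAP. Both file names here are hypothetical.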

Where necessary, the submitted runs have been corrected so that they are valid runs in the TREC format. The following corrections were made:

- The runs were made to comply with the TREC format specified in the submission guidelines for the task.

- When a topic contains an example image that is part of the wikipediaMM collection, that image is removed from the retrieval results for the topic. The goal is to find relevant images that the user is not already familiar with, and the user is clearly familiar with the images they supplied as examples.

- When an image is retrieved more than once for a given topic, only its highest-ranked occurrence is kept. The duplicates are removed and the ranks of the remaining results are renumbered accordingly.
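The three corrections above can be sketched as follows. This is an illustration only, not the organisers' actual correction script. It assumes the standard six-column TREC run line (`topic Q0 docno rank score tag`) and a hypothetical mapping `examples` from each topic ID to the IDs of its example images that occur in the collection. Where the real correction repaired malformed lines, this sketch simply skips them.

```python
from collections import defaultdict


def correct_run(lines, examples):
    """Apply the three corrections to the lines of one TREC run.

    lines:    iterable of 'topic Q0 docno rank score tag' strings
    examples: hypothetical dict topic -> set of example image IDs in the collection
    Returns the corrected run as a list of lines.
    """
    by_topic = defaultdict(list)
    for line in lines:
        fields = line.split()
        if len(fields) != 6:  # this sketch just skips lines not in TREC format
            continue
        topic, _, docno, rank, score, tag = fields
        by_topic[topic].append((int(rank), float(score), docno, tag))

    corrected = []
    for topic in sorted(by_topic):
        seen = set()
        kept = []
        # Walk the results best rank first; the sort is stable for equal ranks.
        for _, score, docno, tag in sorted(by_topic[topic], key=lambda r: r[0]):
            if docno in examples.get(topic, set()):
                continue  # drop the topic's own example images
            if docno in seen:
                continue  # keep only the highest-ranked occurrence
            seen.add(docno)
            kept.append((docno, score, tag))
        # Renumber the surviving results 1, 2, 3, ...
        for new_rank, (docno, score, tag) in enumerate(kept, start=1):
            corrected.append(f"{topic} Q0 {docno} {new_rank} {score} {tag}")
    return corrected
```

Because the duplicate check runs in rank order, the first occurrence that is seen is always the best-ranked one. The later copies are the ones that get discarded.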

| Results |

The evaluation results for all submitted runs in the wikipediaMM task are given below.

For the results of the runs submitted by a single participant, select the corresponding group from the following list:

cea, chemnitz, curien, cwi, imperial, irit, sztaki, ualicante, ugeneva, upeking, upmc-lip6, utoulon

Summary statistics for all runs sorted by MAP

| Rank | Participant | Run | Type | Modality | Feedback/Expansion | MAP | P@5 | P@10 | R-prec. | Bpref | Number of retrieved documents | Number of relevant retrieved documents |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | upeking | zzhou3 | AUTO | TXT | QE | 0.3444 | 0.5733 | 0.4760 | 0.3794 | 0.3610 | 18839 | 3406 |
| 2 | cea | ceaTxtCon | AUTO | TXTCON | QE | 0.2735 | 0.5467 | 0.4653 | 0.3225 | 0.2981 | 12577 | 2186 |
| 3 | ualicante | IRnNoCamel | AUTO | TXT | NOFB | 0.2700 | 0.4533 | 0.3893 | 0.3075 | 0.2728 | 47972 | 3497 |
| 4 | cea | ceaTxt | AUTO | TXT | QE | 0.2632 | 0.5200 | 0.4427 | 0.3080 | 0.2837 | 12577 | 2184 |
| 5 | ualicante | IRnNoCamelLca | AUTO | TXT | FB | 0.2614 | 0.4000 | 0.3587 | 0.2950 | 0.2577 | 74167 | 3624 |
| 6 | ualicante | IRnNoCamelGeog | AUTO | TXT | QE | 0.2605 | 0.4267 | 0.3640 | 0.3000 | 0.2646 | 48948 | 3423 |
| 7 | ualicante | IRnConcSinCamLca | AUTO | TXTCON | FB | 0.2593 | 0.3973 | 0.3493 | 0.3016 | 0.2617 | 73287 | 3538 |
| 8 | ualicante | IRnConcSinCam | AUTO | TXTCON | NOFB | 0.2587 | 0.4240 | 0.3627 | 0.2975 | 0.2631 | 55663 | 3505 |
| 9 | ualicante | IRnNoCamelLcaGeog | AUTO | TXT | FBQE | 0.2583 | 0.4000 | 0.3613 | 0.2922 | 0.2548 | 74167 | 3591 |
| 10 | sztaki | bp_acad_textonly | AUTO | TXT | NOFB | 0.2551 | 0.4400 | 0.3653 | 0.3020 | 0.2711 | 48847 | 3338 |
| 11 | sztaki | bp_acad_textonly_qe | AUTO | TXT | QE | 0.2546 | 0.4320 | 0.3720 | 0.2993 | 0.2740 | 74984 | 3348 |
| 12 | ualicante | IRnConcSinCamLcaGeog | AUTO | TXTCON | FBQE | 0.2537 | 0.3920 | 0.3440 | 0.2940 | 0.2568 | 73287 | 3524 |
| 13 | cwi | cwi_lm_txt | AUTO | TXT | NOFB | 0.2528 | 0.3840 | 0.3427 | 0.3080 | 0.2673 | 47962 | 3408 |
| 14 | sztaki | bp_acad_avgw_glob10 | AUTO | TXTIMG | NOFB | 0.2526 | 0.4320 | 0.3640 | 0.2955 | 0.2671 | 48830 | 3332 |
| 15 | sztaki | bp_acad_avgw_glob10_qe | AUTO | TXTIMG | QE | 0.2514 | 0.4240 | 0.3693 | 0.2939 | 0.2702 | 73984 | 3339 |
| 16 | ualicante | IRnConcSinCamGeog | AUTO | TXTCON | QE | 0.2509 | 0.4133 | 0.3427 | 0.2924 | 0.2556 | 56254 | 3447 |
| 17 | sztaki | bp_acad_glob10_qe | AUTO | TXTIMG | QE | 0.2502 | 0.4213 | 0.3653 | 0.2905 | 0.2686 | 73984 | 3339 |
| 18 | sztaki | bp_acad_glob10 | AUTO | TXTIMG | NOFB | 0.2497 | 0.4400 | 0.3627 | 0.2955 | 0.2646 | 48830 | 3332 |
| 19 | cwi | cwi_lm__lprior_txt | AUTO | TXT | NOFB | 0.2493 | 0.4293 | 0.3467 | 0.2965 | 0.2622 | 47962 | 3391 |
| 20 | sztaki | bp_acad_avg5 | AUTO | TXTIMG | NOFB | 0.2491 | 0.4480 | 0.3640 | 0.2970 | 0.2668 | 48540 | 3321 |
| 21 | sztaki | bp_acad_avgw_qe | AUTO | TXTIMG | QE | 0.2465 | 0.4160 | 0.3640 | 0.2887 | 0.2661 | 73984 | 3339 |
| 22 | curien | LaHC_run01 | AUTO | TXT | NOFB | 0.2453 | 0.4027 | 0.3680 | 0.2905 | 0.2532 | 54638 | 3467 |
| 23 | ualicante | IRnConcSinCamPrf | AUTO | TXTCON | FB | 0.2326 | 0.2853 | 0.2840 | 0.2673 | 0.2280 | 58863 | 3589 |
| 24 | ualicante | IRnNoCamelPrf | AUTO | TXT | FB | 0.2321 | 0.3120 | 0.3107 | 0.2665 | 0.2274 | 53472 | 3622 |
| 25 | ualicante | IRnNoCamelPrfGeog | AUTO | TXT | FBQE | 0.2287 | 0.3147 | 0.3120 | 0.2611 | 0.2230 | 54441 | 3561 |
| 26 | ualicante | IRnConcSinCamPrfGeog | AUTO | TXTCON | FBQE | 0.2238 | 0.2880 | 0.2853 | 0.2561 | 0.2180 | 59413 | 3556 |
| 27 | chemnitz | cut-mix-qe | AUTO | TXTIMGCON | QE | 0.2195 | 0.4080 | 0.3627 | 0.2734 | 0.2400 | 52623 | 3111 |
| 28 | ualicante | IRnConcepto | AUTO | TXTCON | NOFB | 0.2183 | 0.3760 | 0.3213 | 0.2574 | 0.2274 | 52863 | 3233 |
| 29 | ualicante | IRn | AUTO | TXT | NOFB | 0.2178 | 0.3760 | 0.3200 | 0.2569 | 0.2269 | 52863 | 3231 |
| 30 | chemnitz | cut-txt-a | AUTO | TXT | NOFB | 0.2166 | 0.3760 | 0.3440 | 0.2695 | 0.2352 | 52623 | 3111 |
| 31 | ualicante | IRnConceptoLca | AUTO | TXTCON | FB | 0.2158 | 0.3333 | 0.3053 | 0.2573 | 0.2278 | 74724 | 3304 |
| 32 | chemnitz | cut-mix | AUTO | TXTIMG | NOFB | 0.2138 | 0.4027 | 0.3467 | 0.2645 | 0.2312 | 52623 | 3111 |
| 33 | ualicante | IRnConceptoGeog | AUTO | TXTCON | QE | 0.2094 | 0.3627 | 0.3040 | 0.2479 | 0.2169 | 53350 | 3208 |
| 34 | ualicante | IRn_Geog | AUTO | TXT | QE | 0.2091 | 0.3653 | 0.3040 | 0.2474 | 0.2166 | 53350 | 3207 |
| 35 | ualicante | IRnConceptoPrf | AUTO | TXTCON | FB | 0.2083 | 0.3333 | 0.3227 | 0.2536 | 0.2177 | 74876 | 3302 |
| 36 | ualicante | IRnConceptoLcaGeog | AUTO | TXTCON | FBQE | 0.2067 | 0.3253 | 0.2987 | 0.2475 | 0.2189 | 74268 | 3265 |
| 37 | ualicante | IRn_Lca | AUTO | TXT | FB | 0.2053 | 0.3227 | 0.2947 | 0.2530 | 0.2179 | 74643 | 3293 |
| 38 | chemnitz | cut-mix-concepts | AUTO | TXTIMGCON | NOFB | 0.2048 | 0.4133 | 0.3493 | 0.2552 | 0.2202 | 70803 | 3134 |
| 39 | ualicante | IRn_PRF | AUTO | TXT | FB | 0.2033 | 0.3147 | 0.3053 | 0.2341 | 0.2016 | 67017 | 3279 |
| 40 | ualicante | IRn_LcaGeog | AUTO | TXT | FBQE | 0.2010 | 0.3200 | 0.2987 | 0.2472 | 0.2131 | 74643 | 3274 |
| 41 | ualicante | IRn_PrfGeog | AUTO | TXT | FBQE | 0.2003 | 0.3040 | 0.3040 | 0.2293 | 0.1977 | 67500 | 3264 |
| 42 | ualicante | IRnConceptoPrfGeog | AUTO | TXTCON | FBQE | 0.1997 | 0.3307 | 0.3093 | 0.2466 | 0.2083 | 74876 | 3281 |
| 43 | upeking | ynli_tliu1.1 | AUTO | IMG | FBQE | 0.1928 | 0.5307 | 0.4507 | 0.2295 | 0.2232 | 23526 | 1310 |
| 44 | imperial | SimpleText | AUTO | TXT | NOFB | 0.1918 | 0.3707 | 0.3240 | 0.2362 | 0.2086 | 47784 | 2983 |
| 45 | upeking | ynli_tliu1 | AUTO | IMG | QE | 0.1912 | 0.5333 | 0.4480 | 0.2309 | 0.2221 | 23526 | 1310 |
| 46 | imperial | TextGeoContext | AUTO | TXT | NOFB | 0.1896 | 0.3760 | 0.3280 | 0.2358 | 0.2052 | 47784 | 2983 |
| 47 | imperial | TextGeoNoContext2 | AUTO | TXT | NOFB | 0.1896 | 0.3760 | 0.3280 | 0.2357 | 0.2052 | 47784 | 2983 |
| 48 | irit | SigRunText | AUTO | TXT | NOFB | 0.1652 | 0.3067 | 0.2880 | 0.2148 | 0.1773 | 11796 | 1462 |
| 49 | irit | SigRunImage | AUTO | TXT | NOFB | 0.1595 | 0.3147 | 0.2787 | 0.2211 | 0.1798 | 10027 | 1441 |
| 50 | upeking | zhou1 | AUTO | TXT | NOFB | 0.1525 | 0.3333 | 0.2573 | 0.1726 | 0.1665 | 2674 | 1093 |
| 51 | upeking | zhou1.1 | AUTO | TXT | FB | 0.1498 | 0.3253 | 0.2467 | 0.1717 | 0.1640 | 2674 | 1093 |
| 52 | ugeneva | unige_text_baseline | AUTO | TXT | NOFB | 0.1440 | 0.2320 | 0.2053 | 0.1806 | 0.1436 | 74908 | 3435 |
| 53 | imperial | ImageText | AUTO | TXTIMG | NOFB | 0.1225 | 0.2293 | 0.2213 | 0.1718 | 0.1371 | 63895 | 2836 |
| 54 | imperial | ImageTextBRF | AUTO | TXTIMG | FB | 0.1225 | 0.2293 | 0.2213 | 0.1718 | 0.1371 | 63895 | 2836 |
| 55 | irit | SigRunComb | AUTO | TXT | NOFB | 0.1193 | 0.2133 | 0.1907 | 0.1612 | 0.1257 | 19877 | 1919 |
| 56 | upmc-lip6 | TFUSION_TFIDF_LM | AUTO | TXT | NOFB | 0.1193 | 0.2400 | 0.2160 | 0.1581 | 0.1280 | 30632 | 1792 |
| 57 | curien | LaHC_run03 | MANUAL | TXTIMG | FB | 0.1174 | 0.4080 | 0.2613 | 0.1530 | 0.1378 | 74986 | 1004 |
| 58 | curien | LaHC_run05 | AUTO | TXTIMG | FBQE | 0.1161 | 0.3840 | 0.2600 | 0.1493 | 0.1348 | 74986 | 987 |
| 59 | upmc-lip6 | TFIDF | AUTO | TXT | NOFB | 0.1130 | 0.2187 | 0.1920 | 0.1572 | 0.1276 | 30863 | 1798 |
| 60 | upmc-lip6 | LM | AUTO | TXT | NOFB | 0.1078 | 0.2533 | 0.1973 | 0.1507 | 0.1196 | 74990 | 1813 |
| 61 | curien | LaHC_run06 | AUTO | TXTIMG | FB | 0.1067 | 0.3893 | 0.3280 | 0.1384 | 0.1234 | 1741 | 429 |
| 62 | upmc-lip6 | TIFUSION_LMTF_COS | AUTO | TXTIMG | NOFB | 0.1050 | 0.2267 | 0.2267 | 0.1505 | 0.1157 | 30632 | 1795 |
| 63 | upmc-lip6 | TIFUSION_LMTF_MANIF | AUTO | TXTIMG | NOFB | 0.1049 | 0.2293 | 0.2240 | 0.1501 | 0.1160 | 30632 | 1797 |
| 64 | ugeneva | text_fs | AUTO | TXT | NOFB | 0.0917 | 0.1653 | 0.1667 | 0.1342 | 0.1068 | 7414 | 1005 |
| 65 | curien | LaHC_run04 | MANUAL | TXTIMG | FBQE | 0.0760 | 0.2533 | 0.1813 | 0.0992 | 0.0909 | 74986 | 822 |
| 66 | upmc-lip6 | TI_LMTF_MANIF | AUTO | TXTIMG | NOFB | 0.0744 | 0.1920 | 0.1920 | 0.1103 | 0.0886 | 14710 | 1283 |
| 67 | irit | SigRunImageName | AUTO | TXT | NOFB | 0.0743 | 0.1813 | 0.1573 | 0.1146 | 0.0918 | 9598 | 830 |
| 68 | upeking | zhou_ynli_tliu1.1 | AUTO | TXTIMG | FBQE | 0.0603 | 0.0027 | 0.0040 | 0.0323 | 0.0400 | 40819 | 3692 |
| 69 | curien | LaHC_run02 | AUTO | TXTIMG | FB | 0.0577 | 0.2533 | 0.1613 | 0.0739 | 0.0648 | 74989 | 643 |
| 70 | utoulon | LSIS_TXT_method1 | AUTO | TXT | NOFB | 0.0399 | 0.0507 | 0.0467 | 0.0583 | 0.0662 | 74989 | 2191 |
| 71 | utoulon | LSIS4-TXTIMGAUTONOFB | AUTO | TXTIMG | NOFB | 0.0296 | 0.0347 | 0.0307 | 0.0421 | 0.0578 | 74988 | 2602 |
| 72 | utoulon | LSIS-IMGTXT-AUTONOFB | AUTO | TXTIMG | NOFB | 0.0260 | 0.0267 | 0.0253 | 0.0349 | 0.0547 | 74988 | 2485 |
| 73 | utoulon | LSIS-TXTIMGAUTONOFB | AUTO | TXTIMG | NOFB | 0.0233 | 0.0533 | 0.0480 | 0.0300 | 0.0542 | 74989 | 2435 |
| 74 | upeking | zhou_ynli_tliu1 | AUTO | TXTIMG | QE | 0.0053 | 0.0027 | 0.0040 | 0.0049 | 0.0034 | 11619 | 367 |
| 75 | imperial | Image | AUTO | IMG | NOFB | 0.0037 | 0.0187 | 0.0147 | 0.0108 | 0.0086 | 41982 | 207 |
| 76 | utoulon | LSIS-IMG-AUTONOFB | AUTO | IMG | NOFB | 0.0020 | 0.0027 | 0.0027 | 0.0049 | 0.0242 | 74998 | 551 |
| 77 | upmc-lip6 | visualonly | AUTO | IMG | NOFB | 0.0010 | 0.0107 | 0.0080 | 0.0053 | 0.0038 | 41986 | 157 |
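The MAP, P@5, P@10 and R-prec. columns, and the two document counts, are standard trec_eval outputs aggregated over topics. The following is a minimal per-topic sketch of these measures. It is an illustrative re-implementation, not trec_eval itself, and makes three assumptions:

- The ranked list is already in the order trec_eval would use.
- Topics are averaged with equal weight.
- Bpref is left out, because it also depends on the judged non-relevant images.

```python
def topic_measures(ranked, relevant):
    """Per-topic measures for one ranked list of image IDs.

    ranked:   list of image IDs, best first
    relevant: set of image IDs judged relevant for the topic
    """
    R = len(relevant)
    hits = 0
    precision_sum = 0.0
    for i, doc in enumerate(ranked, start=1):
        if doc in relevant:
            hits += 1
            precision_sum += hits / i  # precision at the rank of each relevant image

    def prec_at(k):
        # Precision at cutoff k always divides by k, even if fewer were retrieved.
        return sum(1 for d in ranked[:k] if d in relevant) / k

    return {
        "AP": precision_sum / R if R else 0.0,
        "P@5": prec_at(5),
        "P@10": prec_at(10),
        "R-prec": prec_at(R) if R else 0.0,
        "retrieved": len(ranked),
        "relevant_retrieved": hits,
    }


def summarise(per_topic):
    """Average the precision measures over topics; sum the document counts."""
    n = len(per_topic)
    summary = {m: sum(t[m] for t in per_topic) / n for m in ("AP", "P@5", "P@10", "R-prec")}
    summary["MAP"] = summary.pop("AP")  # mean of per-topic average precision
    for m in ("retrieved", "relevant_retrieved"):
        summary[m] = sum(t[m] for t in per_topic)  # the table reports totals
    return summary
```

The document counts are summed rather than averaged, which matches the totals reported in the last two columns of the table.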

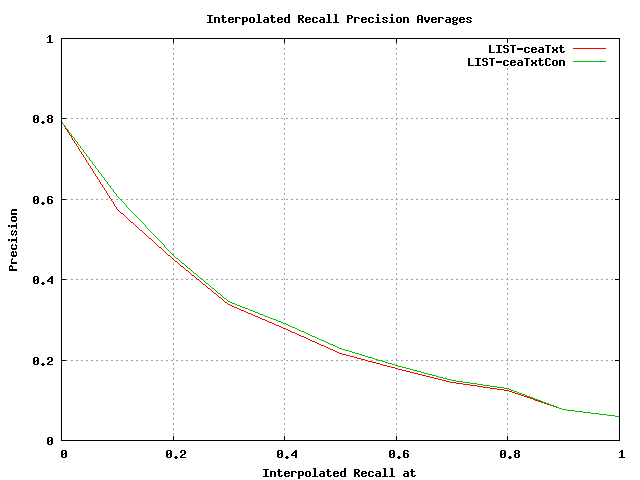

| cea |

The corrected runs submitted by cea for the wikipediaMM task are available in submissions_cea.tar.gz.

These are the evaluation results for these runs as produced by trec_eval:

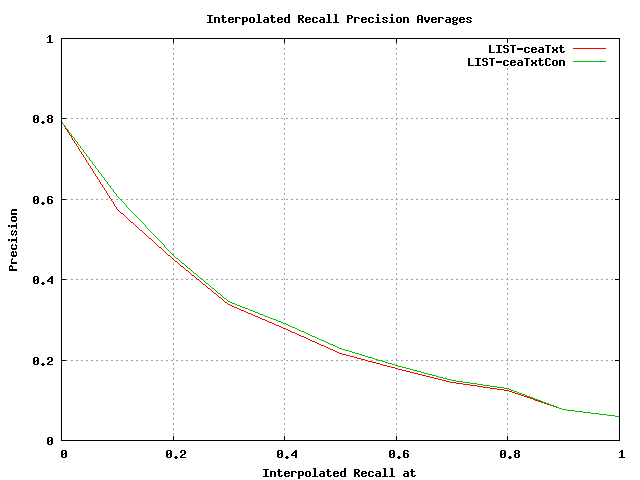

Interpolated Recall Precision Averages at eleven standard recall levels

Summary statistics for the runs submitted by cea sorted by MAP

| Overall rank | Participant | Run | Type | Modality | Feedback/Expansion | MAP | P@5 | P@10 | R-prec. | Bpref | Number of retrieved documents | Number of relevant retrieved documents |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2 | cea | ceaTxtCon | AUTO | TXTCON | QE | 0.2735 | 0.5467 | 0.4653 | 0.3225 | 0.2981 | 12577 | 2186 |
| 4 | cea | ceaTxt | AUTO | TXT | QE | 0.2632 | 0.5200 | 0.4427 | 0.3080 | 0.2837 | 12577 | 2184 |

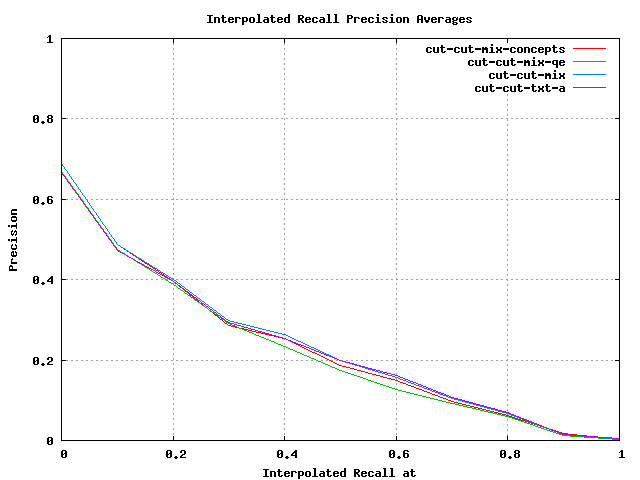

| chemnitz |

The corrected runs submitted by chemnitz for the wikipediaMM task are available in submissions_chemnitz.tar.gz.

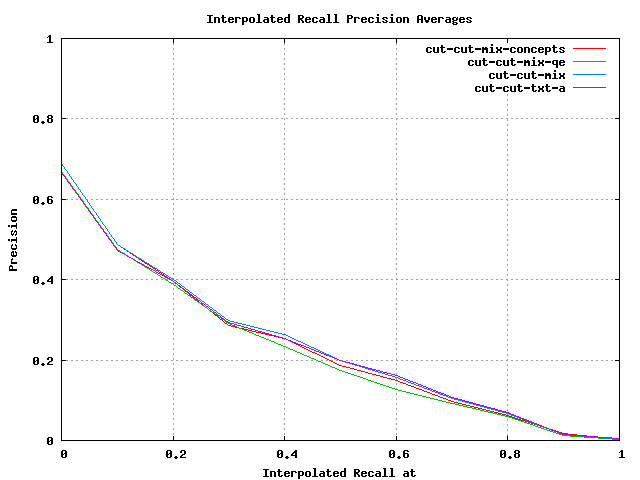

These are the evaluation results for these runs as produced by trec_eval:

Interpolated Recall Precision Averages at eleven standard recall levels

Summary statistics for the runs submitted by chemnitz sorted by MAP

| Overall rank | Participant | Run | Type | Modality | Feedback/Expansion | MAP | P@5 | P@10 | R-prec. | Bpref | Number of retrieved documents | Number of relevant retrieved documents |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 27 | chemnitz | cut-mix-qe | AUTO | TXTIMGCON | QE | 0.2195 | 0.4080 | 0.3627 | 0.2734 | 0.2400 | 52623 | 3111 |
| 30 | chemnitz | cut-txt-a | AUTO | TXT | NOFB | 0.2166 | 0.3760 | 0.3440 | 0.2695 | 0.2352 | 52623 | 3111 |
| 32 | chemnitz | cut-mix | AUTO | TXTIMG | NOFB | 0.2138 | 0.4027 | 0.3467 | 0.2645 | 0.2312 | 52623 | 3111 |
| 38 | chemnitz | cut-mix-concepts | AUTO | TXTIMGCON | NOFB | 0.2048 | 0.4133 | 0.3493 | 0.2552 | 0.2202 | 70803 | 3134 |

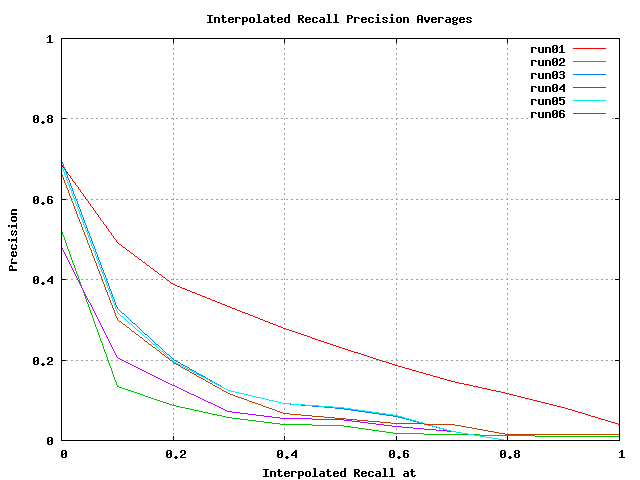

| curien |

The corrected runs submitted by curien for the wikipediaMM task are available in submissions_curien.tar.gz.

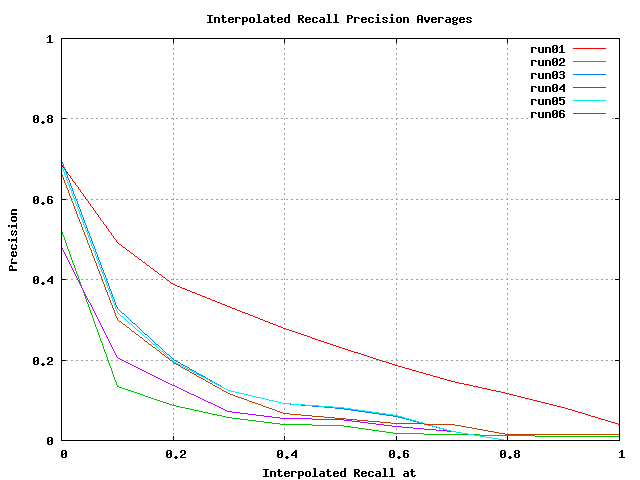

These are the evaluation results for these runs as produced by trec_eval:

Interpolated Recall Precision Averages at eleven standard recall levels

Summary statistics for the runs submitted by curien sorted by MAP

| Overall rank | Participant | Run | Type | Modality | Feedback/Expansion | MAP | P@5 | P@10 | R-prec. | Bpref | Number of retrieved documents | Number of relevant retrieved documents |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 22 | curien | LaHC_run01 | AUTO | TXT | NOFB | 0.2453 | 0.4027 | 0.3680 | 0.2905 | 0.2532 | 54638 | 3467 |
| 57 | curien | LaHC_run03 | MANUAL | TXTIMG | FB | 0.1174 | 0.4080 | 0.2613 | 0.1530 | 0.1378 | 74986 | 1004 |
| 58 | curien | LaHC_run05 | AUTO | TXTIMG | FBQE | 0.1161 | 0.3840 | 0.2600 | 0.1493 | 0.1348 | 74986 | 987 |
| 61 | curien | LaHC_run06 | AUTO | TXTIMG | FB | 0.1067 | 0.3893 | 0.3280 | 0.1384 | 0.1234 | 1741 | 429 |
| 65 | curien | LaHC_run04 | MANUAL | TXTIMG | FBQE | 0.0760 | 0.2533 | 0.1813 | 0.0992 | 0.0909 | 74986 | 822 |
| 69 | curien | LaHC_run02 | AUTO | TXTIMG | FB | 0.0577 | 0.2533 | 0.1613 | 0.0739 | 0.0648 | 74989 | 643 |

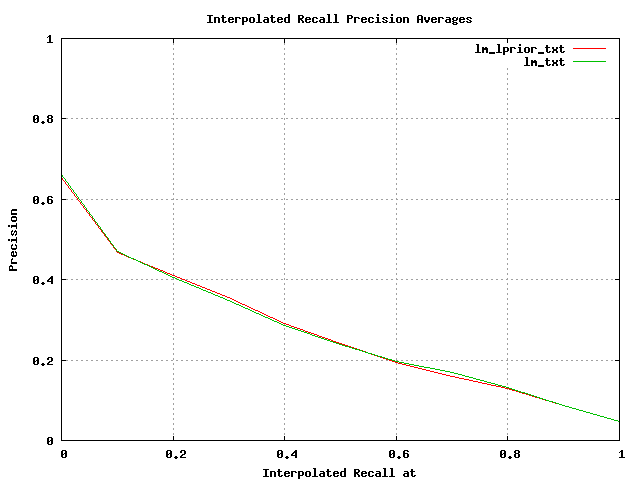

| cwi |

The corrected runs submitted by cwi for the wikipediaMM task are available in submissions_cwi.tar.gz.

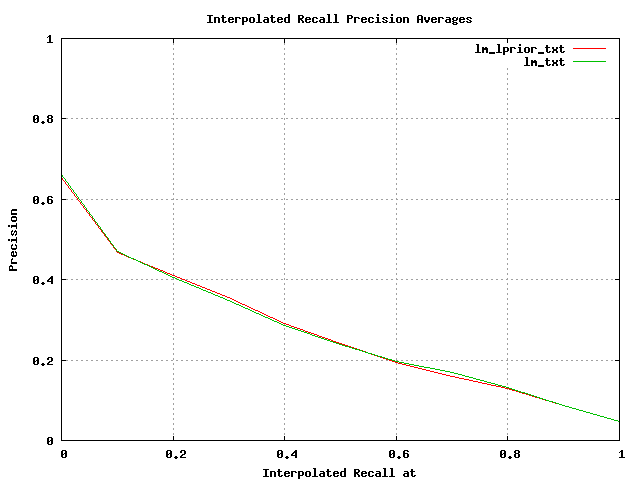

These are the evaluation results for these runs as produced by trec_eval:

Interpolated Recall Precision Averages at eleven standard recall levels

Summary statistics for the runs submitted by cwi sorted by MAP

| Overall rank | Participant | Run | Type | Modality | Feedback/Expansion | MAP | P@5 | P@10 | R-prec. | Bpref | Number of retrieved documents | Number of relevant retrieved documents |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 13 | cwi | cwi_lm_txt | AUTO | TXT | NOFB | 0.2528 | 0.3840 | 0.3427 | 0.3080 | 0.2673 | 47962 | 3408 |
| 19 | cwi | cwi_lm__lprior_txt | AUTO | TXT | NOFB | 0.2493 | 0.4293 | 0.3467 | 0.2965 | 0.2622 | 47962 | 3391 |

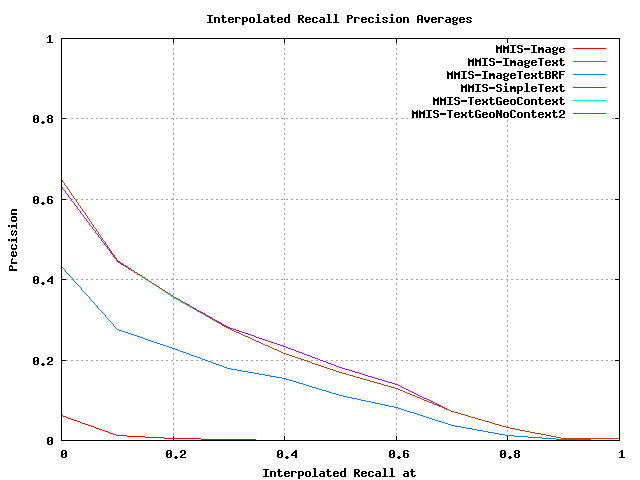

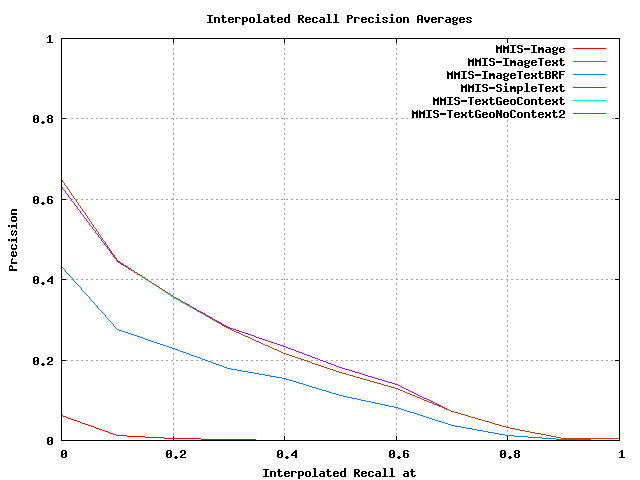

| imperial |

The corrected runs submitted by imperial for the wikipediaMM task are available in submissions_imperial.tar.gz.

These are the evaluation results for these runs as produced by trec_eval:

Interpolated Recall Precision Averages at eleven standard recall levels

Summary statistics for the runs submitted by imperial sorted by MAP

| Overall rank | Participant | Run | Type | Modality | Feedback/Expansion | MAP | P@5 | P@10 | R-prec. | Bpref | Number of retrieved documents | Number of relevant retrieved documents |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 44 | imperial | SimpleText | AUTO | TXT | NOFB | 0.1918 | 0.3707 | 0.3240 | 0.2362 | 0.2086 | 47784 | 2983 |
| 46 | imperial | TextGeoContext | AUTO | TXT | NOFB | 0.1896 | 0.3760 | 0.3280 | 0.2358 | 0.2052 | 47784 | 2983 |
| 47 | imperial | TextGeoNoContext2 | AUTO | TXT | NOFB | 0.1896 | 0.3760 | 0.3280 | 0.2357 | 0.2052 | 47784 | 2983 |
| 53 | imperial | ImageText | AUTO | TXTIMG | NOFB | 0.1225 | 0.2293 | 0.2213 | 0.1718 | 0.1371 | 63895 | 2836 |
| 54 | imperial | ImageTextBRF | AUTO | TXTIMG | FB | 0.1225 | 0.2293 | 0.2213 | 0.1718 | 0.1371 | 63895 | 2836 |
| 75 | imperial | Image | AUTO | IMG | NOFB | 0.0037 | 0.0187 | 0.0147 | 0.0108 | 0.0086 | 41982 | 207 |

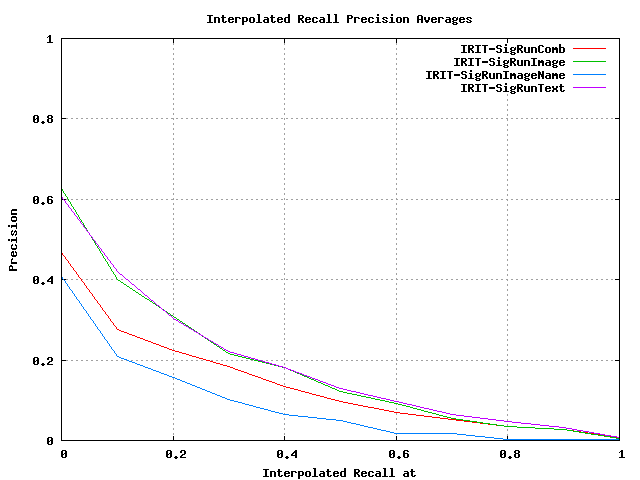

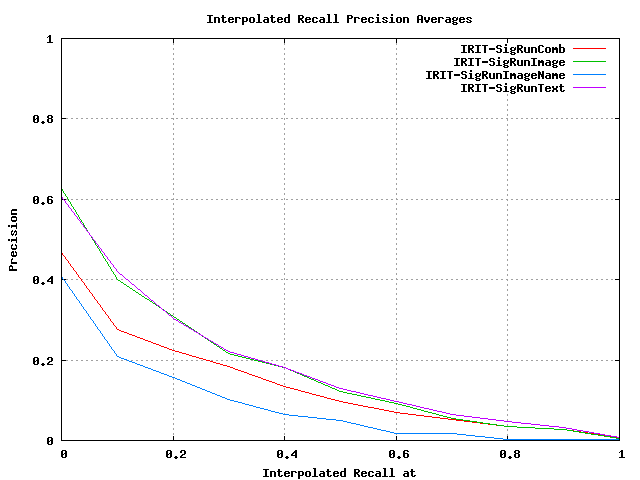

| irit |

The corrected runs submitted by irit for the wikipediaMM task are available in submissions_irit.tar.gz.

These are the evaluation results for these runs as produced by trec_eval:

Interpolated Recall Precision Averages at eleven standard recall levels

Summary statistics for the runs submitted by irit sorted by MAP

| Overall rank | Participant | Run | Type | Modality | Feedback/Expansion | MAP | P@5 | P@10 | R-prec. | Bpref | Number of retrieved documents | Number of relevant retrieved documents |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 48 | irit | SigRunText | AUTO | TXT | NOFB | 0.1652 | 0.3067 | 0.2880 | 0.2148 | 0.1773 | 11796 | 1462 |
| 49 | irit | SigRunImage | AUTO | TXT | NOFB | 0.1595 | 0.3147 | 0.2787 | 0.2211 | 0.1798 | 10027 | 1441 |
| 55 | irit | SigRunComb | AUTO | TXT | NOFB | 0.1193 | 0.2133 | 0.1907 | 0.1612 | 0.1257 | 19877 | 1919 |
| 67 | irit | SigRunImageName | AUTO | TXT | NOFB | 0.0743 | 0.1813 | 0.1573 | 0.1146 | 0.0918 | 9598 | 830 |

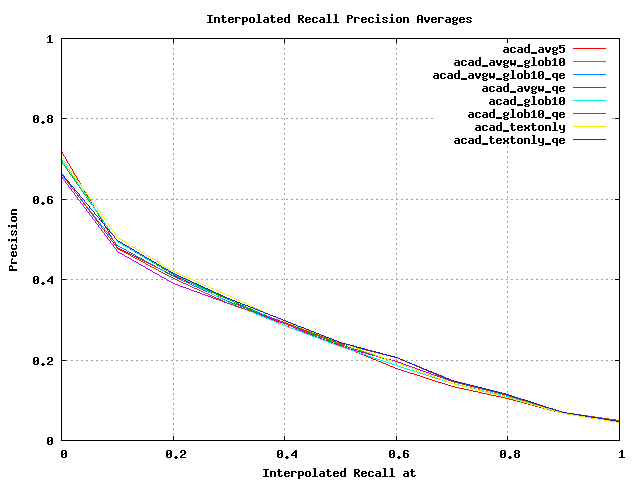

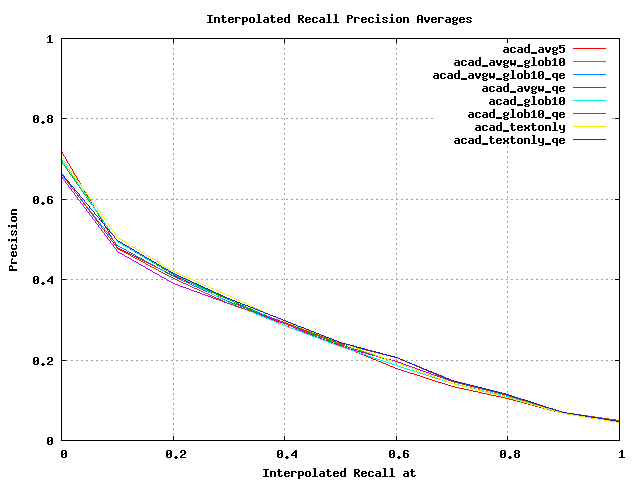

| sztaki |

The corrected runs submitted by sztaki for the wikipediaMM task are available in submissions_sztaki.tar.gz.

These are the evaluation results for these runs as produced by trec_eval:

Interpolated Recall Precision Averages at eleven standard recall levels

Summary statistics for the runs submitted by sztaki sorted by MAP

| Overall rank | Participant | Run | Type | Modality | Feedback/Expansion | MAP | P@5 | P@10 | R-prec. | Bpref | Number of retrieved documents | Number of relevant retrieved documents |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 10 | sztaki | bp_acad_textonly | AUTO | TXT | NOFB | 0.2551 | 0.4400 | 0.3653 | 0.3020 | 0.2711 | 48847 | 3338 |
| 11 | sztaki | bp_acad_textonly_qe | AUTO | TXT | QE | 0.2546 | 0.4320 | 0.3720 | 0.2993 | 0.2740 | 74984 | 3348 |
| 14 | sztaki | bp_acad_avgw_glob10 | AUTO | TXTIMG | NOFB | 0.2526 | 0.4320 | 0.3640 | 0.2955 | 0.2671 | 48830 | 3332 |
| 15 | sztaki | bp_acad_avgw_glob10_qe | AUTO | TXTIMG | QE | 0.2514 | 0.4240 | 0.3693 | 0.2939 | 0.2702 | 73984 | 3339 |
| 17 | sztaki | bp_acad_glob10_qe | AUTO | TXTIMG | QE | 0.2502 | 0.4213 | 0.3653 | 0.2905 | 0.2686 | 73984 | 3339 |
| 18 | sztaki | bp_acad_glob10 | AUTO | TXTIMG | NOFB | 0.2497 | 0.4400 | 0.3627 | 0.2955 | 0.2646 | 48830 | 3332 |
| 20 | sztaki | bp_acad_avg5 | AUTO | TXTIMG | NOFB | 0.2491 | 0.4480 | 0.3640 | 0.2970 | 0.2668 | 48540 | 3321 |
| 21 | sztaki | bp_acad_avgw_qe | AUTO | TXTIMG | QE | 0.2465 | 0.4160 | 0.3640 | 0.2887 | 0.2661 | 73984 | 3339 |

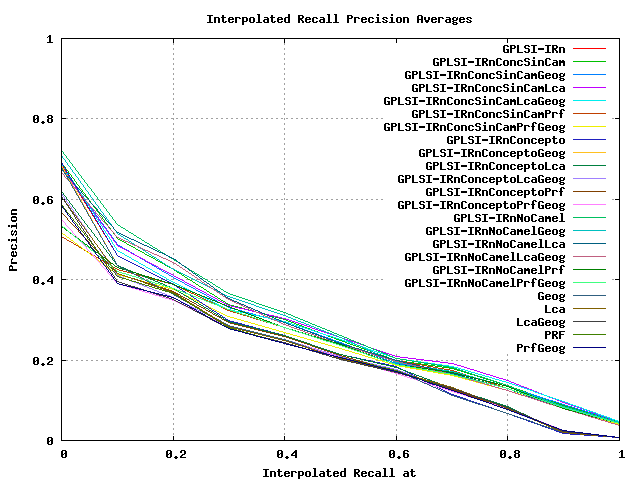

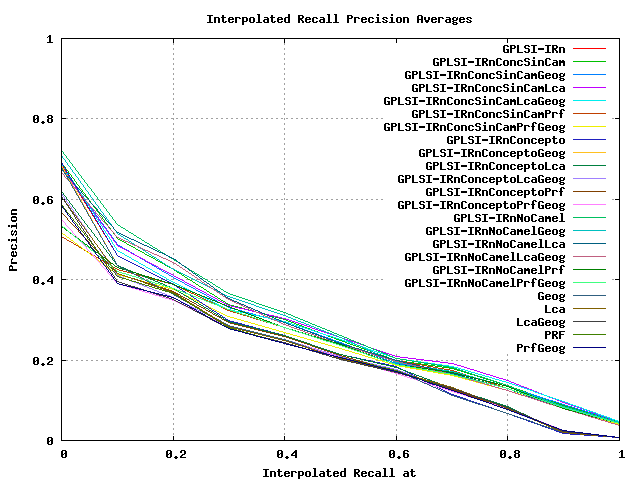

| ualicante |

The corrected runs submitted by ualicante for the wikipediaMM task are available in submissions_ualicante.tar.gz.

These are the evaluation results for these runs as produced by trec_eval:

Interpolated Recall Precision Averages at eleven standard recall levels

Summary statistics for the runs submitted by ualicante sorted by MAP

| Overall rank | Participant | Run | Type | Modality | Feedback/Expansion | MAP | P@5 | P@10 | R-prec. | Bpref | Number of retrieved documents | Number of relevant retrieved documents |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 3 | ualicante | IRnNoCamel | AUTO | TXT | NOFB | 0.2700 | 0.4533 | 0.3893 | 0.3075 | 0.2728 | 47972 | 3497 |
| 5 | ualicante | IRnNoCamelLca | AUTO | TXT | FB | 0.2614 | 0.4000 | 0.3587 | 0.2950 | 0.2577 | 74167 | 3624 |
| 6 | ualicante | IRnNoCamelGeog | AUTO | TXT | QE | 0.2605 | 0.4267 | 0.3640 | 0.3000 | 0.2646 | 48948 | 3423 |
| 7 | ualicante | IRnConcSinCamLca | AUTO | TXTCON | FB | 0.2593 | 0.3973 | 0.3493 | 0.3016 | 0.2617 | 73287 | 3538 |
| 8 | ualicante | IRnConcSinCam | AUTO | TXTCON | NOFB | 0.2587 | 0.4240 | 0.3627 | 0.2975 | 0.2631 | 55663 | 3505 |
| 9 | ualicante | IRnNoCamelLcaGeog | AUTO | TXT | FBQE | 0.2583 | 0.4000 | 0.3613 | 0.2922 | 0.2548 | 74167 | 3591 |
| 12 | ualicante | IRnConcSinCamLcaGeog | AUTO | TXTCON | FBQE | 0.2537 | 0.3920 | 0.3440 | 0.2940 | 0.2568 | 73287 | 3524 |
| 16 | ualicante | IRnConcSinCamGeog | AUTO | TXTCON | QE | 0.2509 | 0.4133 | 0.3427 | 0.2924 | 0.2556 | 56254 | 3447 |
| 23 | ualicante | IRnConcSinCamPrf | AUTO | TXTCON | FB | 0.2326 | 0.2853 | 0.2840 | 0.2673 | 0.2280 | 58863 | 3589 |
| 24 | ualicante | IRnNoCamelPrf | AUTO | TXT | FB | 0.2321 | 0.3120 | 0.3107 | 0.2665 | 0.2274 | 53472 | 3622 |
| 25 | ualicante | IRnNoCamelPrfGeog | AUTO | TXT | FBQE | 0.2287 | 0.3147 | 0.3120 | 0.2611 | 0.2230 | 54441 | 3561 |
| 26 | ualicante | IRnConcSinCamPrfGeog | AUTO | TXTCON | FBQE | 0.2238 | 0.2880 | 0.2853 | 0.2561 | 0.2180 | 59413 | 3556 |
| 28 | ualicante | IRnConcepto | AUTO | TXTCON | NOFB | 0.2183 | 0.3760 | 0.3213 | 0.2574 | 0.2274 | 52863 | 3233 |
| 29 | ualicante | IRn | AUTO | TXT | NOFB | 0.2178 | 0.3760 | 0.3200 | 0.2569 | 0.2269 | 52863 | 3231 |
| 31 | ualicante | IRnConceptoLca | AUTO | TXTCON | FB | 0.2158 | 0.3333 | 0.3053 | 0.2573 | 0.2278 | 74724 | 3304 |
| 33 | ualicante | IRnConceptoGeog | AUTO | TXTCON | QE | 0.2094 | 0.3627 | 0.3040 | 0.2479 | 0.2169 | 53350 | 3208 |
| 34 | ualicante | IRn_Geog | AUTO | TXT | QE | 0.2091 | 0.3653 | 0.3040 | 0.2474 | 0.2166 | 53350 | 3207 |
| 35 | ualicante | IRnConceptoPrf | AUTO | TXTCON | FB | 0.2083 | 0.3333 | 0.3227 | 0.2536 | 0.2177 | 74876 | 3302 |
| 36 | ualicante | IRnConceptoLcaGeog | AUTO | TXTCON | FBQE | 0.2067 | 0.3253 | 0.2987 | 0.2475 | 0.2189 | 74268 | 3265 |
| 37 | ualicante | IRn_Lca | AUTO | TXT | FB | 0.2053 | 0.3227 | 0.2947 | 0.2530 | 0.2179 | 74643 | 3293 |
| 39 | ualicante | IRn_PRF | AUTO | TXT | FB | 0.2033 | 0.3147 | 0.3053 | 0.2341 | 0.2016 | 67017 | 3279 |
| 40 | ualicante | IRn_LcaGeog | AUTO | TXT | FBQE | 0.2010 | 0.3200 | 0.2987 | 0.2472 | 0.2131 | 74643 | 3274 |
| 41 | ualicante | IRn_PrfGeog | AUTO | TXT | FBQE | 0.2003 | 0.3040 | 0.3040 | 0.2293 | 0.1977 | 67500 | 3264 |
| 42 | ualicante | IRnConceptoPrfGeog | AUTO | TXTCON | FBQE | 0.1997 | 0.3307 | 0.3093 | 0.2466 | 0.2083 | 74876 | 3281 |

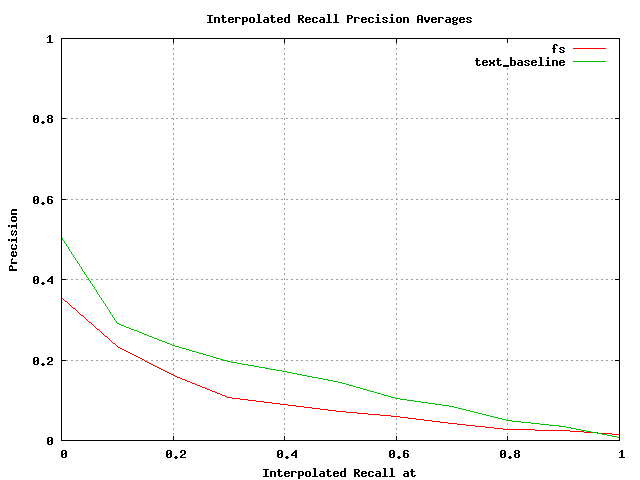

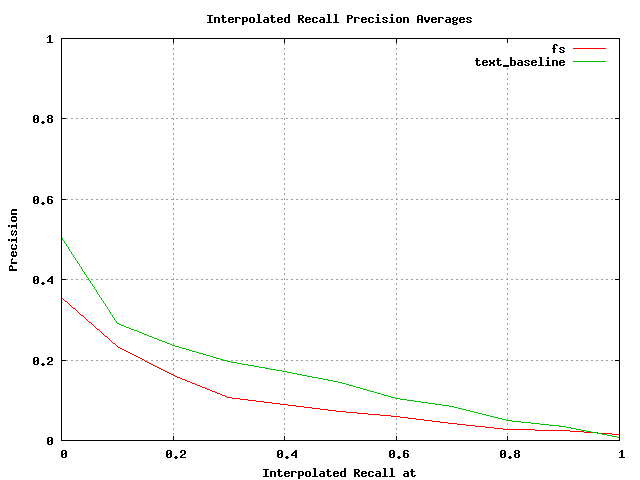

| ugeneva |

The corrected runs submitted by ugeneva for the wikipediaMM task are available in submissions_ugeneva.tar.gz.

These are the evaluation results for these runs as produced by trec_eval:

Interpolated Recall Precision Averages at eleven standard recall levels

Summary statistics for the runs submitted by ugeneva sorted by MAP

| Overall rank | Participant | Run | Type | Modality | Feedback/Expansion | MAP | P@5 | P@10 | R-prec. | Bpref | Number of retrieved documents | Number of relevant retrieved documents |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 52 | ugeneva | unige_text_baseline | AUTO | TXT | NOFB | 0.1440 | 0.2320 | 0.2053 | 0.1806 | 0.1436 | 74908 | 3435 |
| 64 | ugeneva | text_fs | AUTO | TXT | NOFB | 0.0917 | 0.1653 | 0.1667 | 0.1342 | 0.1068 | 7414 | 1005 |

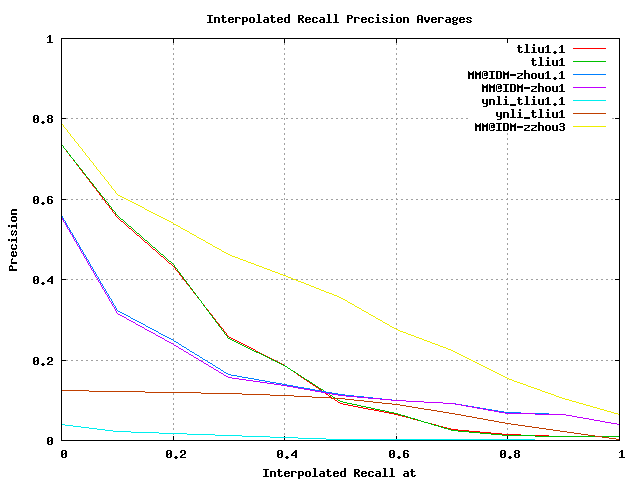

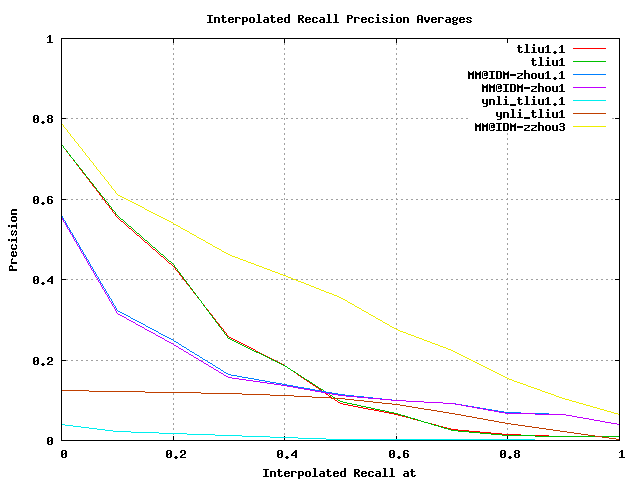

| upeking |

The corrected runs submitted by upeking for the wikipediaMM task are available in submissions_upeking.tar.gz.

These are the evaluation results for these runs as produced by trec_eval:

Interpolated Recall Precision Averages at eleven standard recall levels

Summary statistics for the runs submitted by upeking sorted by MAP

| Overall rank | Participant | Run | Type | Modality | Feedback/Expansion | MAP | P@5 | P@10 | R-prec. | Bpref | Number of retrieved documents | Number of relevant retrieved documents |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | upeking | zzhou3 | AUTO | TXT | QE | 0.3444 | 0.5733 | 0.4760 | 0.3794 | 0.3610 | 18839 | 3406 |
| 43 | upeking | ynli_tliu1.1 | AUTO | IMG | FBQE | 0.1928 | 0.5307 | 0.4507 | 0.2295 | 0.2232 | 23526 | 1310 |
| 45 | upeking | ynli_tliu1 | AUTO | IMG | QE | 0.1912 | 0.5333 | 0.4480 | 0.2309 | 0.2221 | 23526 | 1310 |
| 50 | upeking | zhou1 | AUTO | TXT | NOFB | 0.1525 | 0.3333 | 0.2573 | 0.1726 | 0.1665 | 2674 | 1093 |
| 51 | upeking | zhou1.1 | AUTO | TXT | FB | 0.1498 | 0.3253 | 0.2467 | 0.1717 | 0.1640 | 2674 | 1093 |
| 68 | upeking | zhou_ynli_tliu1.1 | AUTO | TXTIMG | FBQE | 0.0603 | 0.0027 | 0.0040 | 0.0323 | 0.0400 | 40819 | 3692 |
| 74 | upeking | zhou_ynli_tliu1 | AUTO | TXTIMG | QE | 0.0053 | 0.0027 | 0.0040 | 0.0049 | 0.0034 | 11619 | 367 |

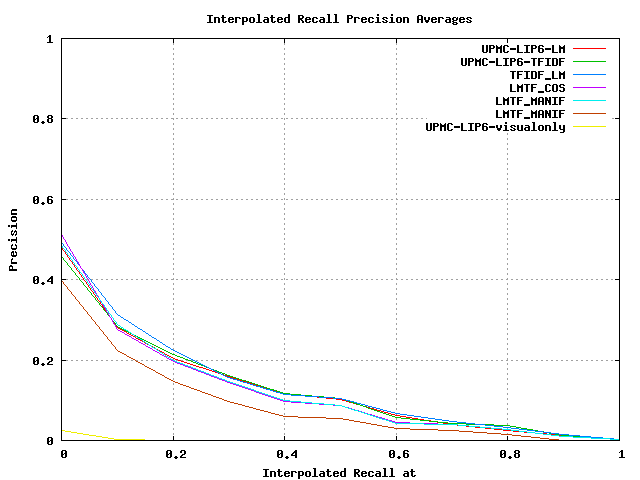

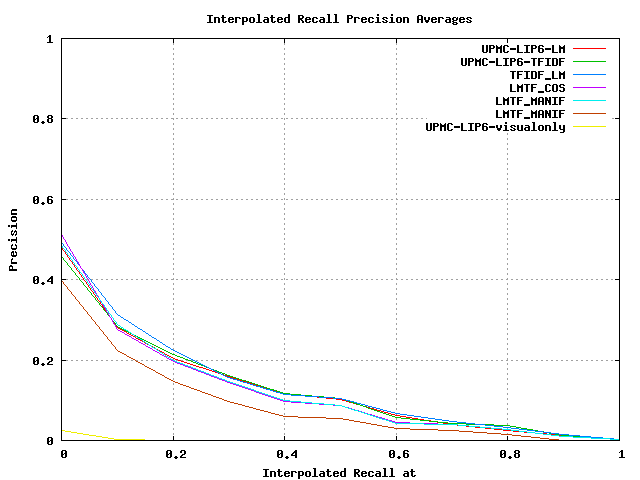

| upmc-lip6 |

The corrected runs submitted by upmc-lip6 for the wikipediaMM task are available in submissions_upmc-lip6.tar.gz.

These are the evaluation results for these runs as produced by trec_eval:

Interpolated Recall Precision Averages at eleven standard recall levels

Summary statistics for the runs submitted by upmc-lip6 sorted by MAP

| Overall rank | Participant | Run | Type | Modality | Feedback/Expansion | MAP | P@5 | P@10 | R-prec. | Bpref | Number of retrieved documents | Number of relevant retrieved documents |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 56 | upmc-lip6 | TFUSION_TFIDF_LM | AUTO | TXT | NOFB | 0.1193 | 0.2400 | 0.2160 | 0.1581 | 0.1280 | 30632 | 1792 |
| 59 | upmc-lip6 | TFIDF | AUTO | TXT | NOFB | 0.1130 | 0.2187 | 0.1920 | 0.1572 | 0.1276 | 30863 | 1798 |
| 60 | upmc-lip6 | LM | AUTO | TXT | NOFB | 0.1078 | 0.2533 | 0.1973 | 0.1507 | 0.1196 | 74990 | 1813 |
| 62 | upmc-lip6 | TIFUSION_LMTF_COS | AUTO | TXTIMG | NOFB | 0.1050 | 0.2267 | 0.2267 | 0.1505 | 0.1157 | 30632 | 1795 |
| 63 | upmc-lip6 | TIFUSION_LMTF_MANIF | AUTO | TXTIMG | NOFB | 0.1049 | 0.2293 | 0.2240 | 0.1501 | 0.1160 | 30632 | 1797 |
| 66 | upmc-lip6 | TI_LMTF_MANIF | AUTO | TXTIMG | NOFB | 0.0744 | 0.1920 | 0.1920 | 0.1103 | 0.0886 | 14710 | 1283 |
| 77 | upmc-lip6 | visualonly | AUTO | IMG | NOFB | 0.0010 | 0.0107 | 0.0080 | 0.0053 | 0.0038 | 41986 | 157 |

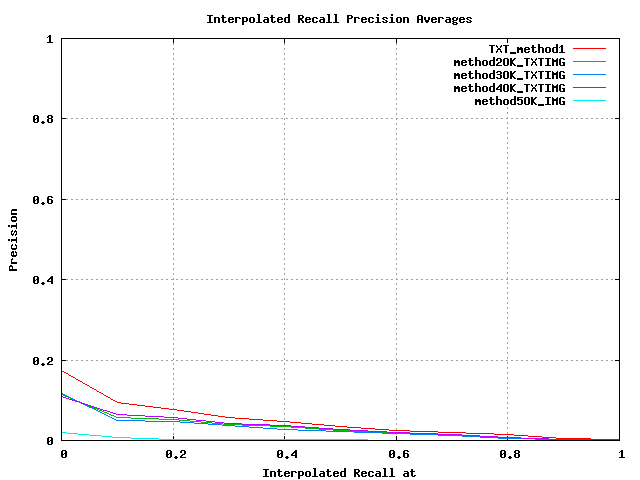

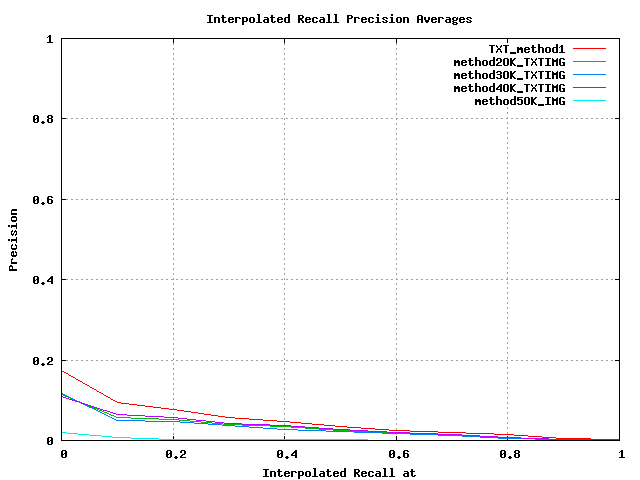

| utoulon |

The corrected runs submitted by utoulon for the wikipediaMM task are available in submissions_utoulon.tar.gz.

These are the evaluation results for these runs as produced by trec_eval:

Interpolated Recall Precision Averages at eleven standard recall levels

Summary statistics for the runs submitted by utoulon sorted by MAP

| Overall rank | Participant | Run | Type | Modality | Feedback/Expansion | MAP | P@5 | P@10 | R-prec. | Bpref | Number of retrieved documents | Number of relevant retrieved documents |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 70 | utoulon | LSIS_TXT_method1 | AUTO | TXT | NOFB | 0.0399 | 0.0507 | 0.0467 | 0.0583 | 0.0662 | 74989 | 2191 |
| 71 | utoulon | LSIS4-TXTIMGAUTONOFB | AUTO | TXTIMG | NOFB | 0.0296 | 0.0347 | 0.0307 | 0.0421 | 0.0578 | 74988 | 2602 |
| 72 | utoulon | LSIS-IMGTXT-AUTONOFB | AUTO | TXTIMG | NOFB | 0.0260 | 0.0267 | 0.0253 | 0.0349 | 0.0547 | 74988 | 2485 |
| 73 | utoulon | LSIS-TXTIMGAUTONOFB | AUTO | TXTIMG | NOFB | 0.0233 | 0.0533 | 0.0480 | 0.0300 | 0.0542 | 74989 | 2435 |
| 76 | utoulon | LSIS-IMG-AUTONOFB | AUTO | IMG | NOFB | 0.0020 | 0.0027 | 0.0027 | 0.0049 | 0.0242 | 74998 | 551 |