
Wikipedia retrieval task 2011

Registration

Registration is now closed.

Introduction

ImageCLEF's Wikipedia Retrieval task provides a testbed for the system-oriented evaluation of visual information retrieval from a collection of Wikipedia images and articles. The aim is to investigate retrieval approaches in the context of a large and heterogeneous collection of images and their noisy text annotations (similar to those encountered on the Web), searched by users with diverse information needs. This diversity is simulated through the range of topics covered by the queries and through different types of queries that are expected to be better served by textual, visual or multimodal retrieval. In 2011, the task uses the ImageCLEF 2010 Wikipedia Collection (Popescu et al., 2010), which contains 237,434 Wikipedia images covering diverse topics of interest. These images are associated with unstructured and noisy textual annotations in English, French, and German.

(Popescu et al., 2010) A. Popescu, T. Tsikrika and J. Kludas. Overview of the Wikipedia Retrieval Task at ImageCLEF 2010. In CLEF (Notebook Papers/LABs/Workshops), 2010.

ImageCLEF 2010 Wikipedia Collection

The ImageCLEF 2010 Wikipedia collection consists of 237,434 images and associated user-supplied annotations. The collection was built to cover similar topics in English, German and French. Topical similarity was obtained by selecting only Wikipedia articles that have versions in all three languages and are illustrated with at least one image in each version: 44,664 such articles were extracted from the September 2009 Wikipedia dumps, containing a total of 265,987 images. Since the collection is intended to be freely distributed, we decided to remove all images with unclear copyright status. After this step, duplicate elimination and some additional cleanup, 237,434 images remain in the collection, with the following language distribution:

- English only: 70,127
- German only: 50,291
- French only: 28,461
- English and German: 26,880
- English and French: 20,747
- German and French: 9,646
- English, German and French: 22,899
- Language undetermined: 8,144
- No textual annotation: 239
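Because the nine language groups above are disjoint, they should add up to the 237,434 images in the collection, and the number of images annotated in a given language follows from adding every group that contains it. The short Python check below is our own sketch, not part of the task's tooling; the language codes `en`/`de`/`fr` and the names `Bucket` and `images_with_language` are hypothetical.

```python
from typing import FrozenSet, List, NamedTuple


class Bucket(NamedTuple):
    """One row of the language distribution listed for the collection."""
    label: str
    languages: FrozenSet[str]  # annotation languages present for these images
    count: int


# Counts copied from the collection description; the language codes are our own shorthand.
BUCKETS: List[Bucket] = [
    Bucket("English only", frozenset({"en"}), 70_127),
    Bucket("German only", frozenset({"de"}), 50_291),
    Bucket("French only", frozenset({"fr"}), 28_461),
    Bucket("English and German", frozenset({"en", "de"}), 26_880),
    Bucket("English and French", frozenset({"en", "fr"}), 20_747),
    Bucket("German and French", frozenset({"de", "fr"}), 9_646),
    Bucket("English, German and French", frozenset({"en", "de", "fr"}), 22_899),
    Bucket("Language undetermined", frozenset(), 8_144),
    Bucket("No textual annotation", frozenset(), 239),
]

TOTAL_IMAGES = 237_434      # images in the final collection
EXTRACTED_IMAGES = 265_987  # images in the 44,664 extracted articles


def images_with_language(code: str) -> int:
    """Number of images annotated in `code`, alone or together with other languages."""
    return sum(b.count for b in BUCKETS if code in b.languages)


if __name__ == "__main__":
    # The buckets are disjoint, so they must add up to the collection size.
    assert sum(b.count for b in BUCKETS) == TOTAL_IMAGES
    for code in ("en", "de", "fr"):
        print(code, images_with_language(code))
    # Images dropped by copyright filtering, duplicate elimination and cleanup.
    print("removed", EXTRACTED_IMAGES - TOTAL_IMAGES)
```

Run as a script, it prints 140653 images with English annotations, 109716 with German, 81753 with French, and 28553 images removed between extraction and the final collection.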

|

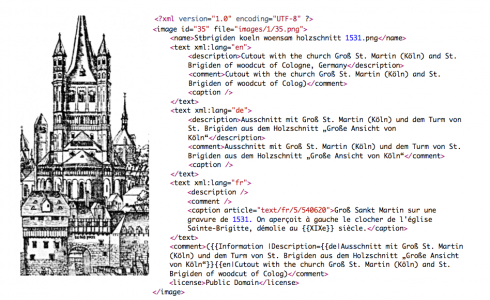

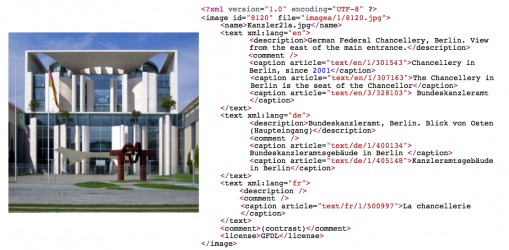

Two examples that illustrate the images in the collection and their metadata are provided below:

Download

The data are no longer available here; they can be downloaded from the Resources page for the ImageCLEF Wikipedia Image Retrieval Datasets.

Search Engines:

Evaluation Objectives

The characteristics of the new Wikipedia collection allow for the investigation of the following objectives:

The results of the Wikipedia Retrieval task at ImageCLEF 2010 showed that the best multimedia retrieval approaches outperformed the text-based approaches. To promote research on multimodal approaches, this year introduces a subtask focused on late fusion. In this subtask, which will take place after the announcement of the main task results, all participants have access to the text-based and content-based runs submitted by other participants and are free to combine them in whatever way they consider suitable to obtain multimodal runs. As in 2010, a second focus is the effectiveness of multilingual approaches for multimedia document retrieval.
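The late-fusion subtask leaves the combination method open. One common choice, shown here as a sketch rather than a method prescribed by the organisers, is a weighted linear combination of min-max normalized scores (a weighted form of CombSUM). The run representation, the 0.7 text weight and the function names are illustrative assumptions.

```python
from typing import Dict, List, Tuple

# One run for one topic: image id -> retrieval score (hypothetical representation).
Run = Dict[str, float]


def min_max_normalize(run: Run) -> Run:
    """Rescale one run's scores to [0, 1] so text and visual scores become comparable."""
    if not run:
        return {}
    lo, hi = min(run.values()), max(run.values())
    if hi == lo:
        # All scores equal: treat every retrieved image as equally good.
        return {image: 1.0 for image in run}
    return {image: (score - lo) / (hi - lo) for image, score in run.items()}


def late_fusion(text_run: Run, visual_run: Run,
                text_weight: float = 0.7, top_k: int = 1000) -> List[Tuple[str, float]]:
    """Weighted CombSUM: an image missing from one run contributes 0 from that run."""
    text_norm = min_max_normalize(text_run)
    visual_norm = min_max_normalize(visual_run)
    fused: Dict[str, float] = {}
    for image in text_norm.keys() | visual_norm.keys():
        fused[image] = (text_weight * text_norm.get(image, 0.0)
                        + (1.0 - text_weight) * visual_norm.get(image, 0.0))
    # Highest fused score first; ties broken by image id for a stable order.
    ranked = sorted(fused.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:top_k]


if __name__ == "__main__":
    text_run = {"img1": 12.0, "img2": 8.0, "img3": 4.0}
    visual_run = {"img2": 0.9, "img4": 0.5, "img1": 0.1}
    for image, score in late_fusion(text_run, visual_run):
        print(image, round(score, 3))
```

In this toy example the fused ranking is img1, img2, img4, img3: img4 is retrieved only by the visual run, yet it still outranks img3, which received the lowest text score. Normalizing before combining matters because raw text scores (here up to 12.0) and visual similarities (here below 1.0) live on different scales.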

Topics

The topics for the ImageCLEF 2011 Wikipedia Retrieval task were developed based on an analysis of an image search engine's logs.

Download

The topics are multimedia queries that can consist of a textual and a visual part. Concepts that might be needed to constrain the results should be added to the title field. An example topic in the appropriate format is the following: <topic> </topic> Therefore, the topics include the following fields:

Retrieval Experiments

Experiments are performed as follows: participants are given topics, which they use to create queries that are run against the image collection. This process may iterate (e.g., involving relevance feedback) until they are satisfied with their runs. Participants might try different methods to increase the number of relevant images in the top N rank positions (e.g., query expansion). Participants are free to experiment with whatever image retrieval methods they wish, e.g., query expansion based on thesaurus lookup or relevance feedback, indexing and retrieval on only part of the image caption, different retrieval models, and combinations of text-based and content-based methods. Given the many possible approaches to this ad-hoc retrieval task, rather than list all of them we ask participants to indicate which of the following applies to each of their runs (we consider these the "main" dimensions that define the query for this ad-hoc task):

- Annotation language:
- Comment:
- Topic language:
- Run type:
- Feedback or Query Expansion:
- Retrieval type (Modality):
- Topic field:
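Feedback and query expansion are left entirely to the participants. As one illustration, not a method prescribed by the task, the sketch below applies pseudo-relevance feedback in the style of Rocchio: the captions of the top-ranked images from an initial run are assumed relevant, and the query's term weights are moved toward their centroid. The tokenized inputs, the alpha/beta values and the length-normalized term frequencies are our assumptions.

```python
from collections import Counter
from typing import Dict, List


def rocchio_expand(query_terms: List[str], feedback_docs: List[List[str]],
                   alpha: float = 1.0, beta: float = 0.75,
                   max_terms: int = 10) -> Dict[str, float]:
    """Pseudo-relevance feedback: q' = alpha * q + beta * centroid(feedback docs).

    `feedback_docs` are the tokenized captions of the top-ranked images from an
    initial run; no non-relevant set is used, so Rocchio's negative term is omitted.
    """
    weights: Dict[str, float] = {
        term: alpha * tf for term, tf in Counter(query_terms).items()
    }
    for doc in feedback_docs:
        doc_tf = Counter(doc)
        length = sum(doc_tf.values())
        for term, tf in doc_tf.items():
            # Length-normalized term frequency, averaged over the feedback set.
            weights[term] = weights.get(term, 0.0) + beta * (tf / length) / len(feedback_docs)
    # Keep only the strongest terms as the expanded query.
    top = sorted(weights.items(), key=lambda item: (-item[1], item[0]))[:max_terms]
    return dict(top)


if __name__ == "__main__":
    feedback = [["eiffel", "tower", "paris", "night"], ["tower", "paris", "france"]]
    print(rocchio_expand(["eiffel", "tower"], feedback, max_terms=3))
```

With these two hypothetical feedback captions, the expanded query keeps "tower", "eiffel" and "paris" as its three strongest terms: "paris" enters the query because it occurs in both feedback captions, while "night" and "france" are cut by the `max_terms` limit.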

Submissions

Participants can submit up to 20 system runs. The submission system is now open at the ImageCLEF registration system (select Runs > Submit a Run). Participants are required to submit ranked lists of (up to) the top 1000 images, ranked in descending order of similarity (i.e., the most similar images at the top of the list). Submissions for this ad-hoc task use the TREC format. Please note that there should be at least one document entry in your results for each topic (i.e., if your system returns no results for a query, insert a dummy entry, e.g. 25 1 16019 0 4238 xyzT10af5). This ensures that all systems are compared over the same number of topics and relevant documents. Submissions not following the required format will not be evaluated.

Information to be provided during submission
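The submission rules can be illustrated with a small run-file writer. This sketch assumes the usual six-column TREC run layout that the dummy entry follows (topic id, a constant iteration field, image id, rank, score, run tag); the function name, the four-decimal score formatting, ranks starting at 1, and the default dummy image id 16019 (borrowed from that example) are our assumptions, not the official specification.

```python
from typing import Dict, List, Tuple

MAX_RESULTS = 1000  # the task asks for (up to) the top 1000 images per topic


def format_trec_run(results: Dict[str, List[Tuple[str, float]]],
                    topic_ids: List[str], run_tag: str,
                    dummy_image: str = "16019") -> List[str]:
    """Return TREC-style run lines: topic, iteration, image id, rank, score, run tag."""
    lines: List[str] = []
    for topic in topic_ids:
        # Descending score, ties broken by image id, truncated to 1000 entries.
        ranked = sorted(results.get(topic, []), key=lambda item: (-item[1], item[0]))
        ranked = ranked[:MAX_RESULTS]
        if not ranked:
            # Every topic needs at least one line; the dummy image id is an assumption.
            ranked = [(dummy_image, 0.0)]
        for rank, (image_id, score) in enumerate(ranked, start=1):
            lines.append(f"{topic} 1 {image_id} {rank} {score:.4f} {run_tag}")
    return lines


if __name__ == "__main__":
    results = {"26": [("202", 0.2), ("101", 0.9)]}
    print("\n".join(format_trec_run(results, ["25", "26"], "myRun1")))
```

Here topic 25 has no results, so it receives the single dummy line `25 1 16019 1 0.0000 myRun1`, and topic 26 is written with image 101 ahead of image 202 because its score is higher. Iterating over the full list of topic ids, rather than over the topics that happen to have results, is what guarantees that no topic is silently missing from the run.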

Schedule

A tentative schedule can be found here:

Organisers