
BirdCLEF 2016

| In a nutshell |
|---|
| Best performing system (its reference is missing from the task overview): Audio Based Bird Species Identification using Deep Learning Techniques, Elias Sprengel, Martin Jaggi, Yannic Kilcher, Thomas Hofmann, LifeCLEF 2016 working notes, Evora, Portugal |
| Overview of the task: LifeCLEF Bird Identification Task 2016: The arrival of Deep learning, Hervé Goëau, Hervé Glotin, Willem-Pier Vellinga, Robert Planqué, Alexis Joly, LifeCLEF 2016 working notes, Evora, Portugal |

Usage scenario

The 2016 bird identification task will share the objectives and scenario of the previous editions: audio-based bird species identification. The general public as well as professionals such as park rangers, ecology consultants and, of course, ornithologists might be users of an automated bird identification system, in the context of wider initiatives related to ecological surveillance, biodiversity conservation or taxonomy. Using audio recordings rather than pictures is justified because bird calls and songs have proven easier to collect and better at discriminating between species.

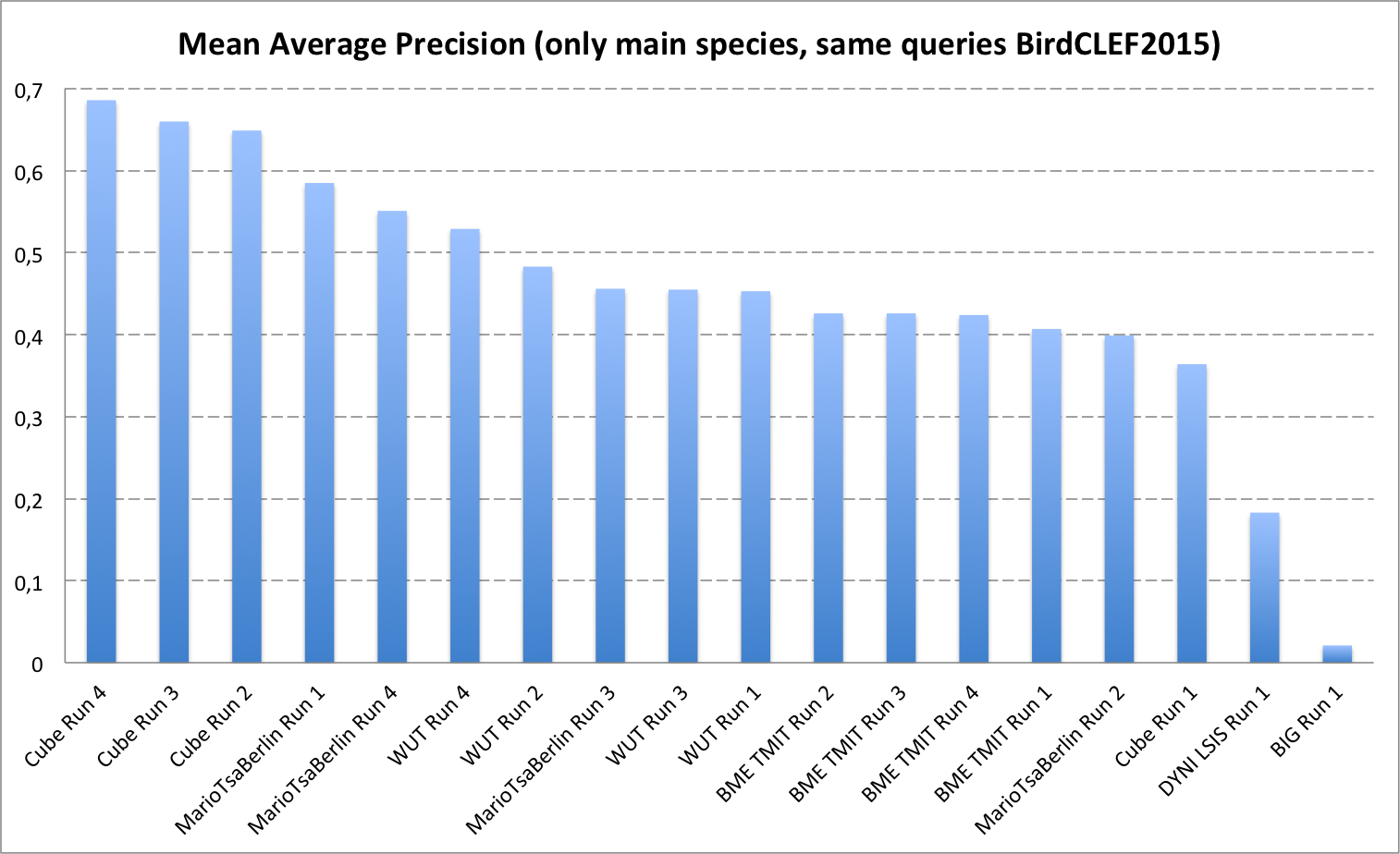

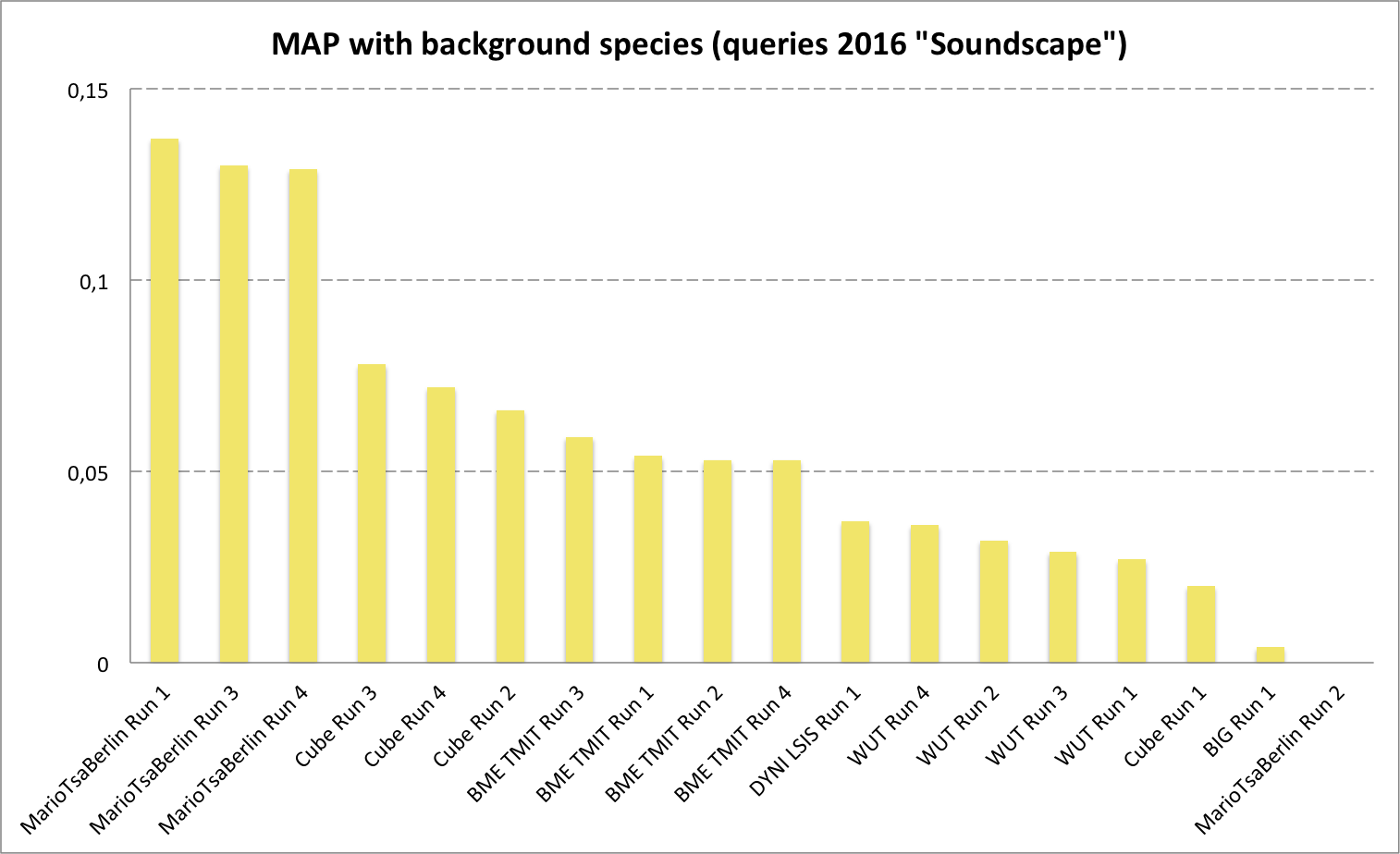

The main novelty of the task with respect to the two previous years is the inclusion of soundscape recordings in addition to the usual recordings that focus on a single foreground species. This new kind of query better fits (possibly crowdsourced) passive acoustic monitoring scenarios, which could increase the number of collected biodiversity records by several orders of magnitude. The organization of this task is supported by the Xeno-Canto foundation for nature sounds as well as the French projects Floris'Tic (INRIA, CIRAD, Tela Botanica) and SABIOD Mastodons.

Data Collection

As in the previous two years of the BirdCLEF challenge, the collection shared with the participants is built from the outstanding Xeno-canto collaborative database (http://www.xeno-canto.org/), which involves more than 2600 birders attempting to cover the full acoustic diversity of the world's bird fauna (currently more than 275k audio records covering 9450 bird species recorded all around the world). The subset used for LifeCLEF 2016 is an extension of the one used in 2015. The training set will remain exactly the same, i.e. 24,607 audio recordings belonging to the 999 bird species most represented in Xeno-canto in the union of Brazil, Colombia, Venezuela, Guyana, Suriname and French Guiana. The test set, however, will be enriched: it will still contain the 8,596 recordings of the 2015 test set, plus a new set of soundscape recordings, i.e. recordings for which the recorder was not targeting a specific species and which might contain an arbitrary number of singing birds (unlike the 2015 recordings, which all contain one dominant, foreground singing bird and some others in the background).

Audio records are associated with various metadata such as the type of sound (call, song, alarm, flight, etc.), the date and locality of the observations (from which rich statistics on species distribution can be derived), some textual comments by the authors, multilingual common names and collaborative quality ratings.

Task overview

The goal of the task is to identify all audible birds within the test recordings. The run file must contain as many lines as the total number of predictions, with at least one and at most 999 predictions per test audio record (999 being the total number of species). Each prediction item (i.e. each line of the file) must respect the following format:

`<MediaId;ClassId;probability>`

where probability is a real value in [0;1] that increases with the confidence in the prediction.
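As an illustration, a run file in this format could be parsed and checked against the per-record limits with a few lines of Python. This is a minimal sketch, not an official validator: it assumes the angle brackets are only notation (each line is literally `MediaId;ClassId;probability`) and that blank lines may be ignored. The helper names `parse_run` and `check_run`, as well as the identifiers in the example, are hypothetical.

```python
from collections import defaultdict

MAX_PREDICTIONS = 999  # total number of species, hence the cap per test record


def parse_run(lines):
    """Parse 'MediaId;ClassId;probability' lines into {MediaId: [(ClassId, probability), ...]}."""
    run = defaultdict(list)
    for lineno, raw in enumerate(lines, start=1):
        line = raw.strip()
        if not line:
            continue  # assumption: blank lines are tolerated
        fields = line.split(";")
        if len(fields) != 3:
            raise ValueError(f"line {lineno}: expected 3 fields, got {len(fields)}")
        media_id, class_id, prob_text = fields
        prob = float(prob_text)  # raises ValueError if the field is not a number
        if not 0.0 <= prob <= 1.0:
            raise ValueError(f"line {lineno}: probability {prob} outside [0;1]")
        run[media_id].append((class_id, prob))
    return dict(run)


def check_run(run, test_media_ids):
    """List the test records with no prediction or with more than MAX_PREDICTIONS."""
    problems = []
    for media_id in test_media_ids:
        n = len(run.get(media_id, []))
        if n == 0:
            problems.append(f"{media_id}: no prediction")
        elif n > MAX_PREDICTIONS:
            problems.append(f"{media_id}: {n} predictions (max {MAX_PREDICTIONS})")
    return problems


# Example with hypothetical identifiers: q2 has no prediction in the run.
run = parse_run(["q1;sp_a;0.9", "q1;sp_b;0.2"])
print(check_run(run, ["q1", "q2"]))  # ['q2: no prediction']
```

Collecting all problems in a list rather than stopping at the first one makes it easier to fix a run file in a single pass.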

Each participating group is allowed to submit up to 4 runs built from different methods. Semi-supervised, interactive or crowdsourced approaches are allowed but will be compared separately from fully automatic methods. Any human assistance in the processing of the test queries must therefore be reported in the submitted runs.

As a novelty this year, participants are allowed to use external training data on the condition that (i) the experiment is entirely reproducible, i.e. the external resource used is clearly referenced and accessible to any other research group in the world, (ii) participants submit at least one run without external training data so that the contribution of such resources can be studied, and (iii) the additional resource does not contain any of the test observations. In particular, it is strictly forbidden to crawl training data from:

www.xeno-canto.org

For each submitted run, please provide a description of the run in the submission system. A combobox specifies whether the run was performed fully automatically or with human assistance in the processing of the queries. A text area should then contain a short description of the method used, to help differentiate the runs submitted by the same group. Optionally, you can add one or several BibTeX references to publications describing the method in more detail. Participants have to indicate whether their method is based on:

- only AUDIO

- only METADATA

- both AUDIO x METADATA

Whether or not external training data were used must also be stated for each run, with a clear reference to the data used.
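The submission rules (at most 4 runs per group, a declared modality per run, and the conditions attached to external training data) can be summarized as a small consistency check. This is a hedged sketch with a hypothetical `RunDescription` record and `check_submission` function; it is not part of the actual submission system, and its xeno-canto test is only a naive substring match on the declared reference.

```python
from dataclasses import dataclass

ALLOWED_MODALITIES = {"AUDIO", "METADATA", "AUDIO x METADATA"}
MAX_RUNS_PER_GROUP = 4
FORBIDDEN_SOURCE = "xeno-canto.org"  # crawling training data from this site is not allowed


@dataclass
class RunDescription:
    """Hypothetical record of what a group declares for each submitted run."""
    name: str
    modality: str                     # one of ALLOWED_MODALITIES
    human_assisted: bool              # kept so assisted runs can be compared separately; not checked below
    uses_external_data: bool = False
    external_data_ref: str = ""       # clear reference to the external data, if any


def check_submission(runs):
    """Return the list of rule violations found in one group's runs."""
    errors = []
    if len(runs) > MAX_RUNS_PER_GROUP:
        errors.append(f"{len(runs)} runs submitted (max {MAX_RUNS_PER_GROUP})")
    for run in runs:
        if run.modality not in ALLOWED_MODALITIES:
            errors.append(f"{run.name}: unknown modality {run.modality!r}")
        if run.uses_external_data:
            if not run.external_data_ref.strip():
                errors.append(f"{run.name}: external training data used but not referenced")
            elif FORBIDDEN_SOURCE in run.external_data_ref.lower():
                errors.append(f"{run.name}: training data crawled from {FORBIDDEN_SOURCE}")
    # Rule (ii): at least one run must not rely on external training data.
    if runs and all(run.uses_external_data for run in runs):
        errors.append("at least one run must use no external training data")
    return errors


# Example: the second run relies on external data, so the first one must not.
runs = [
    RunDescription("run1", "AUDIO", human_assisted=False),
    RunDescription("run2", "AUDIO x METADATA", human_assisted=False,
                   uses_external_data=True, external_data_ref="hypothetical public corpus"),
]
print(check_submission(runs))  # []
```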

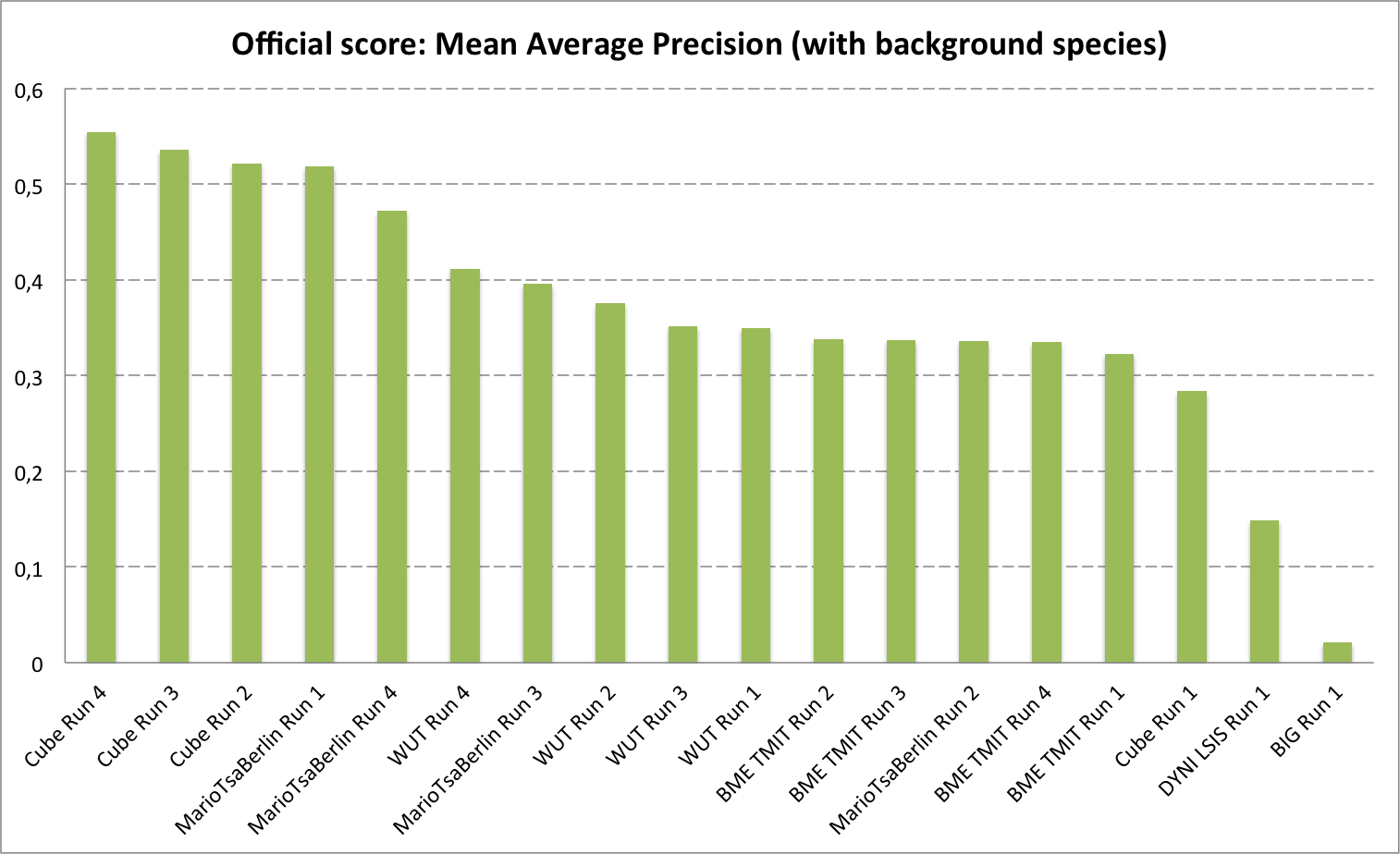

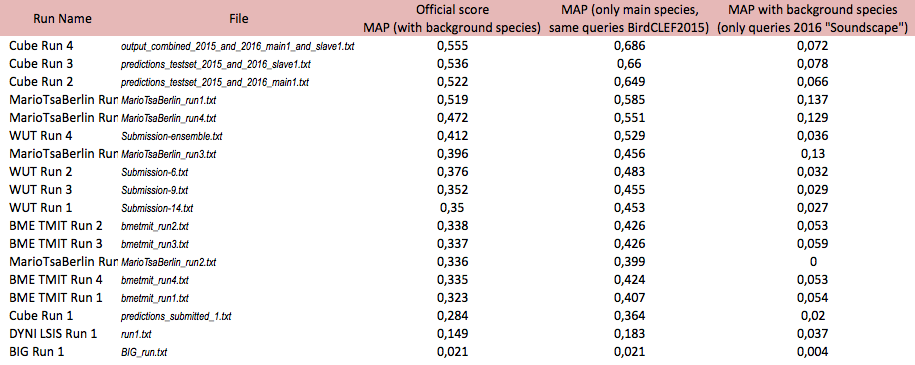

Metric

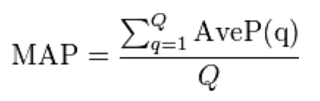

The metric used will be the mean Average Precision (mAP), considering each audio file of the test set as a query, computed as:

$$\mathrm{mAP} = \frac{1}{Q}\sum_{q=1}^{Q}\mathrm{AveP}(q)$$

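where Q is the number of test audio files and AveP(q) for a given test file q is computed as:

$$\mathrm{AveP}(q) = \frac{\sum_{k=1}^{n} P(k)\,\mathrm{rel}(k)}{\text{number of relevant species for } q}$$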

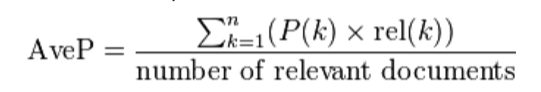

where k is the rank in the sequence of returned species, n is the total number of returned species, P(k) is the precision at cut-off k in the list, and rel(k) is an indicator function equal to 1 if the item at rank k is a relevant species (i.e. one of the species in the ground truth) and 0 otherwise.
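For readers who want to score their own runs, the metric translates directly into Python. The sketch below is an unofficial re-implementation under stated assumptions: AveP is normalized by the number of relevant species in the ground truth (the usual definition of average precision), predictions of a query are ranked by decreasing probability (assuming a higher probability means a more confident prediction), each species appears at most once per query, ties keep the order of the run file, and `ground_truth` (a hypothetical mapping from MediaId to the species actually present) is non-empty.

```python
def average_precision(ranked_species, relevant):
    """AveP(q): sum of P(k) * rel(k) over ranks k, divided by the number of relevant species."""
    if not relevant:
        return 0.0  # assumption: a query with an empty ground truth scores 0
    hits = 0        # relevant species seen so far in the top k
    total = 0.0
    for k, species in enumerate(ranked_species, start=1):
        if species in relevant:  # rel(k) = 1
            hits += 1
            total += hits / k     # P(k): precision at cut-off k
    return total / len(relevant)


def mean_average_precision(run, ground_truth):
    """mAP: average of AveP(q) over the Q test files listed in ground_truth."""
    scores = []
    for media_id, species_present in ground_truth.items():
        predictions = run.get(media_id, [])  # a query missing from the run scores 0
        # Rank by decreasing probability; sorted() is stable, so ties keep file order.
        ranked = [cls for cls, _ in sorted(predictions, key=lambda p: p[1], reverse=True)]
        scores.append(average_precision(ranked, set(species_present)))
    return sum(scores) / len(scores)


# Example with hypothetical identifiers: AveP is 5/6 for q1 and 0 for q2.
run = {"q1": [("b", 0.5), ("a", 0.9), ("c", 0.1)], "q2": [("x", 0.7)]}
ground_truth = {"q1": ["a", "c"], "q2": ["y"]}
print(mean_average_precision(run, ground_truth))  # about 0.417, i.e. (5/6 + 0) / 2
```

Because every relevant species that is never returned still counts in the denominator, a run cannot raise its score by returning fewer species; it can only do so by ranking the species actually present higher.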

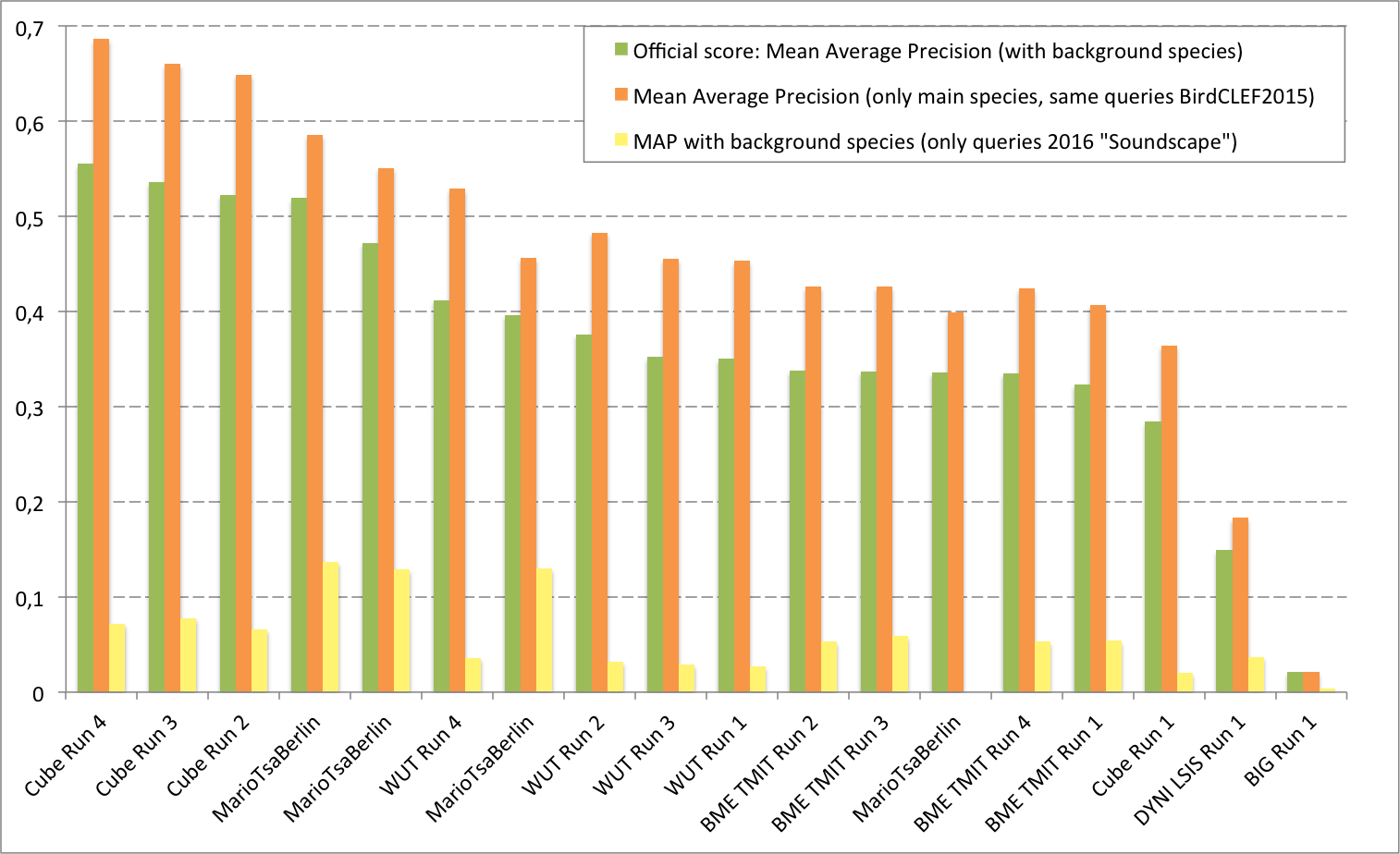

Results


A total of 6 participating groups submitted 18 runs. Thanks to all of you for your efforts and your constructive feedback regarding the organization.
